Data Fabric Architecture

Data fabric architecture provides a centralized, connected layer of integrated data that democratizes cross-company data access

Data fabric architecture benefits

Why use a data fabric architecture?

Always-fresh

Connected data layer

Unify and continually sync data from different sources and technologies under a common semantic layer

Trusted data

Easily accessible

Enable authorized data consumers to instantly access trusted data through APIs, SQL, streaming, messaging, and search

Lower TCO

Automation yields efficiency

Leverage no-code tooling, generative AI, and knowledge graphs to reduce time and effort to data-driven impact

“Data fabric architecture is key to modernizing data management and integration automation”

Gareth Herschel, Gartner VP Analyst

Modernized data management

K2view data fabric architecture

Metadata-driven automation

Design-time and runtime metadata drive efficiencies in data management

Semantic layer adds business context

Business entity data model creates a common language with the business

Entity-based data integration

Integrating and processing data by entities simplifies trusted data delivery

Open, modular, and flexible

Data fabric integrates with and extends your existing data technology stack

Enterprises love the #1 data fabric architecture

Ralf Hellebrand

Programme Director, Technology, Vodafone Germany

K2view data fabric has enabled us to evolve our enterprise data management capabilities for the purpose of driving our business agility, IT velocity, and to raise and improve the customer experience.

K2view data product platform

How K2view supports data fabric architecture

Democratize data access

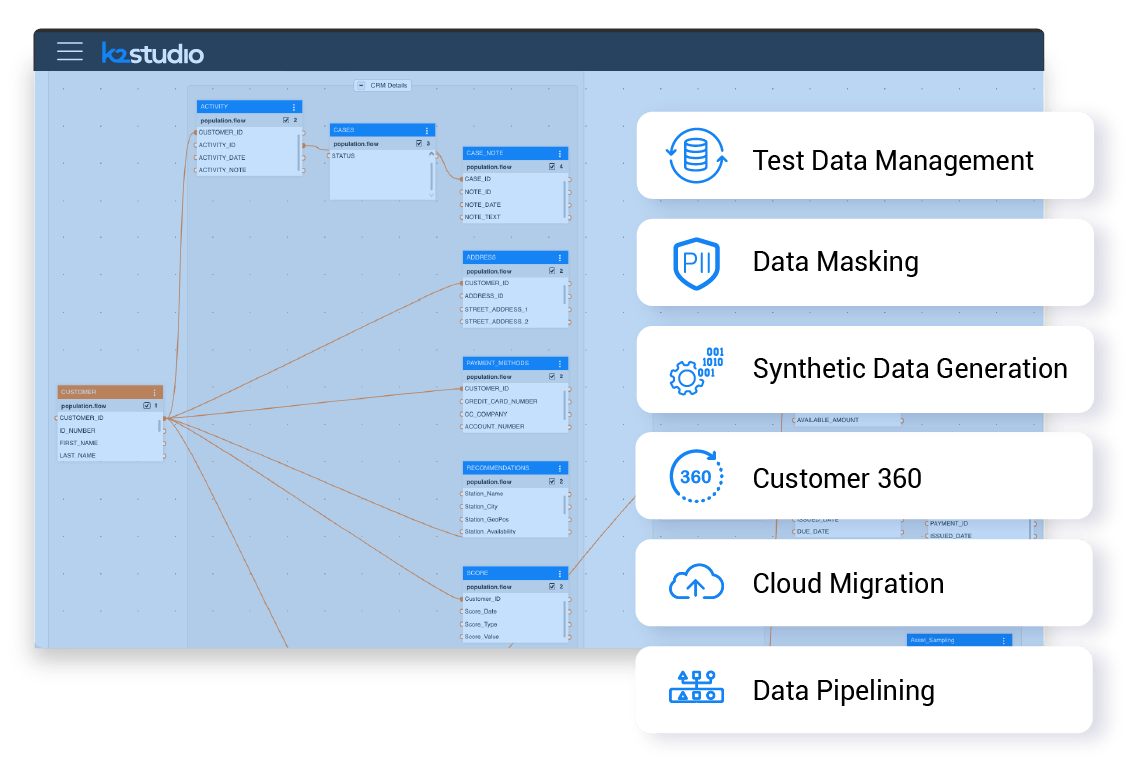

Reusable data products accelerate time to data

Data teams create, package, monitor, and adapt data products that are readily discovered and reused to quickly support new workloads. Common data products include:

- Customer data integration for Customer 360 or Customer Data Hub implementation

- Multi-source data pipelining into a data lake or data warehouse

- Migrating data from on-premise legacy systems to the cloud

- Centralizing multi-domain master data for cross-company consumption

Metadata-driven automation

Metadata drives data integration and management efficiencies

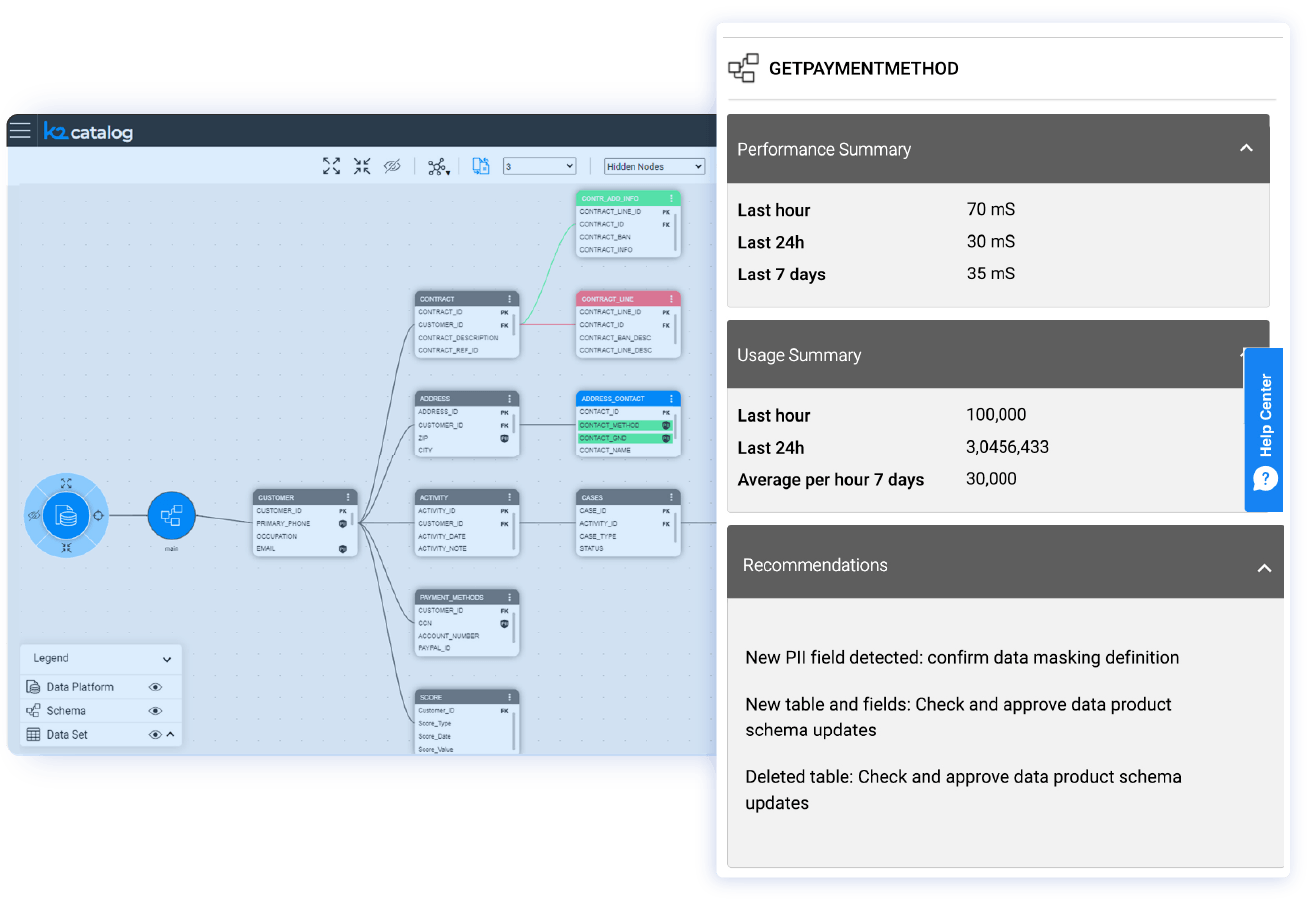

Core to the data fabric architecture is a graph-based data catalog for capturing a company's data assets, including static and active metadata, serving as the foundation for creating the data fabric's semantic layer.

- Data fabric monitors, analyzes, and acts upon static and active metadata to improve trust in data and increase its use

- Data ingestion flows are auto-generated, sensitive data is automatically anonymized, recommendations for data fabric reconfiguration are raised, and more
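As a rough illustration of the graph-based catalog idea described above, the sketch below models data assets as nodes carrying static metadata and relationships as edges. It is a toy example, not K2view's implementation: the `Catalog` class, the asset names, and the `sensitive` flag are all hypothetical.

```python
from collections import defaultdict


class Catalog:
    """Toy graph-based catalog: nodes are data assets, edges link an asset
    to the assets derived from it. All names here are hypothetical."""

    def __init__(self):
        self.nodes = {}                # asset name -> static metadata
        self.edges = defaultdict(set)  # asset name -> downstream asset names

    def add_asset(self, name, **metadata):
        self.nodes[name] = metadata

    def link(self, source, target):
        self.edges[source].add(target)

    def downstream(self, name):
        """Return every asset reachable from `name` (a simple lineage walk)."""
        seen, stack = set(), [name]
        while stack:
            for nxt in self.edges[stack.pop()]:
                if nxt not in seen:
                    seen.add(nxt)
                    stack.append(nxt)
        return seen

    def sensitive_assets(self):
        """List assets flagged as sensitive, e.g. candidates for anonymization."""
        return sorted(n for n, m in self.nodes.items() if m.get("sensitive"))


catalog = Catalog()
catalog.add_asset("crm.customer.email", sensitive=True)
catalog.add_asset("billing.invoice.amount", sensitive=False)
catalog.add_asset("customer_360.contact", sensitive=True)
catalog.link("crm.customer.email", "customer_360.contact")
```

In this sketch, `catalog.sensitive_assets()` is the kind of query an automated anonymization step could start from, and `catalog.downstream(...)` shows which assets are affected when a source field changes.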

Patented approach

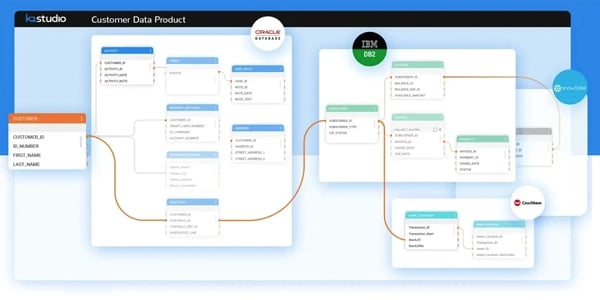

Entity-based data integration

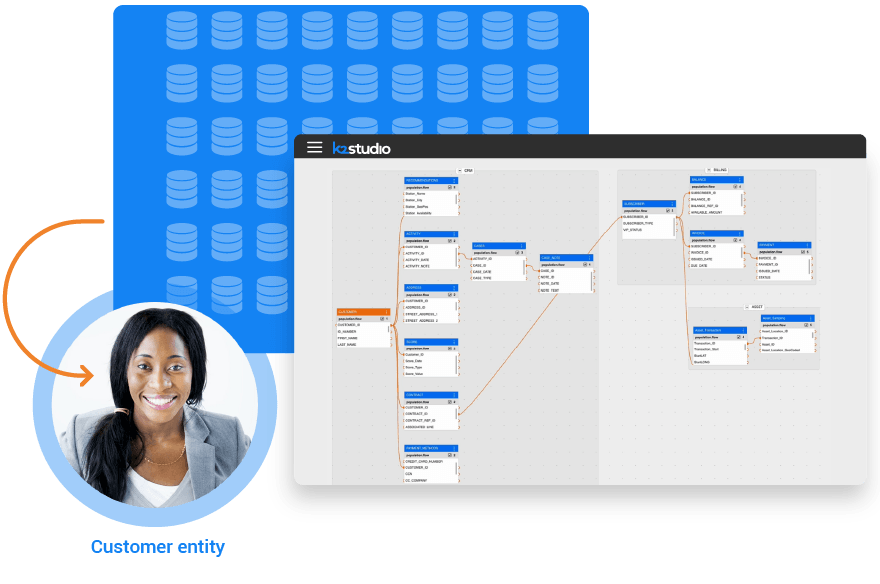

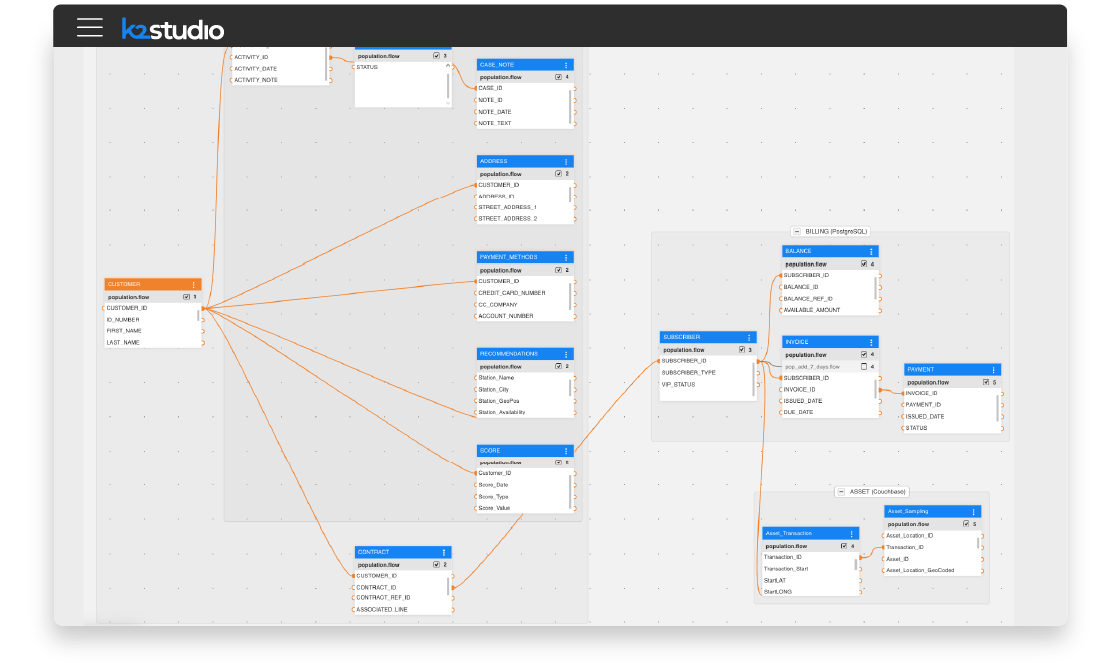

Core to the data fabric architecture is a semantic layer that hides the complexities of the underlying sources. The semantic layer aligns with the company's core business entities around which data is integrated: e.g., customer, product, supplier, loan, order, etc.

- The data fabric semantic layer is auto-discovered from the underlying source systems

- The semantic layer represents an organization's core business entities

- Data is ingested and organized in the fabric by entities, and delivered to authorized data consumers by entities
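To make "organized by entities" more concrete, here is a minimal sketch that groups rows from two source systems around a customer entity. The source rows, field names, and the `build_customer_entities` helper are assumptions made for illustration; they do not reflect how K2view stores or models entities.

```python
from collections import defaultdict

# Hypothetical rows from two source systems, each keyed by a customer ID.
crm_rows = [
    {"customer_id": 1, "name": "Ada"},
    {"customer_id": 2, "name": "Grace"},
]
billing_rows = [
    {"customer_id": 1, "invoice": "INV-10", "amount": 40.0},
    {"customer_id": 1, "invoice": "INV-11", "amount": 15.5},
]


def build_customer_entities(crm, billing):
    """Group source rows by customer so each entity carries all of its data."""
    entities = defaultdict(lambda: {"profile": None, "invoices": []})
    for row in crm:
        entities[row["customer_id"]]["profile"] = {"name": row["name"]}
    for row in billing:
        entities[row["customer_id"]]["invoices"].append(
            {"invoice": row["invoice"], "amount": row["amount"]}
        )
    return dict(entities)


entities = build_customer_entities(crm_rows, billing_rows)
```

A consumer asking for customer 1 then receives one connected record (`entities[1]`) instead of querying the CRM and billing systems separately.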

Single platform, multiple use cases

Driving operational and analytical use cases

Data fabric fuels operational use cases by delivering real-time, connected data from any source into transactional systems. It also prepares and pipelines data into data lakes and data warehouses, in a state that's ready for immediate analysis.

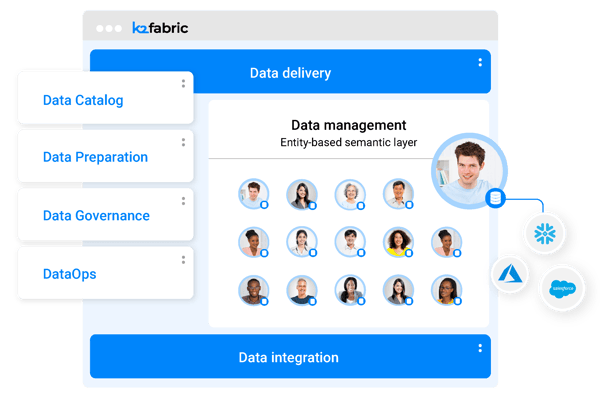

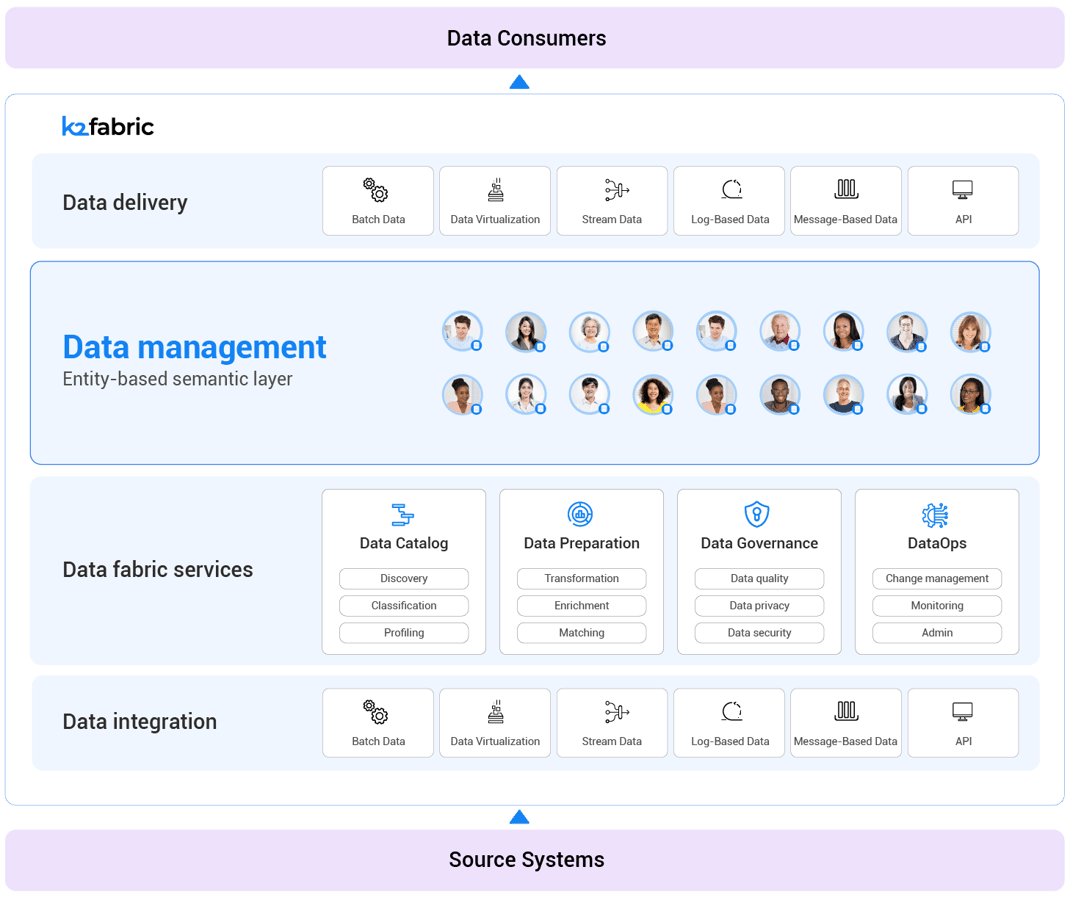

K2view data product platform as a data fabric

Key components of data fabric architecture

Data Integration

From any source, to any target, using any data integration method

Data Catalog

Built-in data discovery, profiling, and classification

Data Preparation

No-code data transformation and data orchestration

Data Governance

Ensure data quality, and enforce data privacy controls

Data Service Automation

Auto-generate web services to access the data fabric

DataOps

Administration, monitoring, and change management

Data fabric architecture frequently asked questions

What is Data Fabric?

Data fabric is an emerging data management pattern, founded on 3 pillars:

- Delivering trusted data: Data fabric connects fragmented data and makes it contextually aware, elevating users' trust in data, and simplifying data access and understanding

- Metadata-driven: Data fabric captures and leverages static (design-time) and active (run-time) metadata to recommend how data can be integrated, organized, and exposed

- Modular architecture: Data fabric is an open architecture, designed to enable the integration of a company's existing data tech stack where it makes sense. It is not meant to be a "rip and replace" of existing data management infrastructure.

What is the difference between data fabric architecture and data mesh?

Data mesh is a data management operating model, while data fabric is a technology architecture.

Data mesh supports a distributed approach to data management, where business domains have the autonomy, know-how, and tools to create, govern, publish, and consume data products.

How is active metadata used in data fabric architecture?

Data fabric uses active metadata - the analysis of runtime metadata, such as data usage, performance, SLA compliance, and cloud costs (where applicable) - to raise alerts and recommendations for continually optimizing how data is integrated, governed, and exposed through the data fabric.
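The idea of turning runtime metadata into recommendations can be sketched in a few lines. The `RuntimeStats` fields, the thresholds, and the wording of the recommendations below are hypothetical and chosen only to illustrate the pattern.

```python
from dataclasses import dataclass


@dataclass
class RuntimeStats:
    """Hypothetical runtime (active) metadata for one data product."""
    data_product: str
    p95_latency_ms: float
    sla_latency_ms: float
    reads_last_30d: int


def recommend(stats):
    """Return plain-text recommendations derived from runtime metadata."""
    recs = []
    if stats.p95_latency_ms > stats.sla_latency_ms:
        recs.append(
            f"{stats.data_product}: latency exceeds SLA, review caching or indexing"
        )
    if stats.reads_last_30d == 0:
        recs.append(f"{stats.data_product}: unused for 30 days, consider retiring")
    return recs


slow = RuntimeStats("customer_360", p95_latency_ms=250.0,
                    sla_latency_ms=200.0, reads_last_30d=1200)
healthy = RuntimeStats("orders", p95_latency_ms=50.0,
                       sla_latency_ms=200.0, reads_last_30d=10)
```

Here `recommend(slow)` yields a single SLA-related recommendation, while `recommend(healthy)` returns an empty list.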

What purpose does the semantic layer serve in data fabric?

In data fabric architecture, the semantic layer hides the complexities of the underlying disparate data sources, and forges a common language between business data consumers and IT data producers. This accelerates access to data, and improves users' understanding of, and trust in, data.

What data integration methods does data fabric support?

Data fabric supports data and application integration by any method, in any combination, depending on the use case.

Supported integration methods include:

- APIs

- ETL/ELT for bulk data movement

- Streaming

- Change Data Capture (CDC)

- Messaging

- Data virtualization

How is data fabric different from a data lake?

While data fabric is an architecture pattern for delivering connected data with semantic meaning, a data lake is a datastore that captures a company's raw data in its native format.

The data lake is often an integration point into a data fabric and/or a data consumer of the data fabric.

What use cases does data fabric support?

Data fabric architecture supports operational and analytical workloads, for example:

- Customer 360: Real-time customer data integration

- Multi-domain master data management

- Test data management: Provisioning data subsets from higher to lower environments

- Data lake pipelining: Preparing and delivering data for analytics

- Cloud migration