Retrieval-augmented generation (RAG) transforms generative AI by letting LLMs combine private enterprise data with publicly available information, taking user interactions to the next level.

No Fresh Data: A Key Generative AI Challenge

For organizations that rely on Generative AI (GenAI) to answer queries from users and customers, delivering personalized, accurate, and up-to-date responses remains a challenge. Technologies like machine translation and abstractive summarization have greatly improved the way GenAI-powered interactions “feel”, making them more fluent and more personable. Yet the information these tools can convey remains limited.

The reason? GenAI relies on Large Language Models (LLMs) that are trained on vast amounts of publicly available Internet information. Major LLM vendors (like OpenAI, Google, and Meta) don’t retrain their models frequently because of the time and cost involved. As a result, the data LLMs draw on is never fully up to date. And, of course, LLMs have no access to the incredibly valuable private data within organizations: the very data that enables the personalized responses users need.

This lack of real-time data is a significant obstacle to the mainstream adoption of GenAI-powered tools in business. When asked questions they have no factual basis to answer, LLMs often produce incorrect or irrelevant output, known as hallucinations, because they rely on the statistical patterns of their training data.

To address this challenge, enterprises are seeking emerging solutions like retrieval-augmented generation.

Enter RAG

Retrieval-Augmented Generation (RAG) is a generative AI framework that enhances the response capabilities of an LLM by retrieving fresh, trusted data from your own knowledge bases and enterprise systems and injecting it into the LLM’s prompt.

Essentially, RAG enables LLMs to integrate public, external information with private, internal data to instantly formulate the most accurate answer possible.

With RAG, LLMs can gain deep insight into specific domains without being retrained, making RAG an efficient and cost-effective way to keep LLMs current, relevant, accurate, and helpful across a wide range of situations.


Understanding the RAG-GenAI Connection

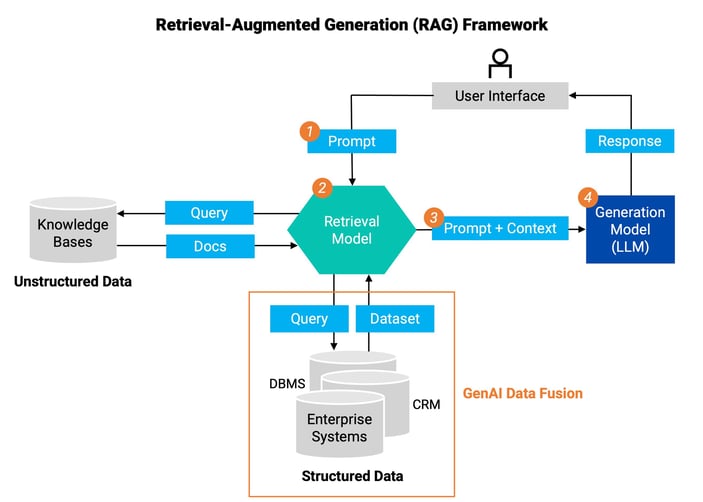

RAG combines search-based retrieval of unstructured data from knowledge bases with a structured data query component, to enhance the relevance and reliability of automated answers.

As illustrated in the RAG diagram above (inspired by Gartner), the retrieval model (the green hexagon in the center) searches, queries, and provisions the most relevant data based on the user’s prompt, turns it into an enriched, contextual prompt, and injects it into the LLM for processing. The LLM, in turn, generates a more accurate and personalized response for the user.
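The retrieve, enrich, and inject flow can be sketched in a few lines of Python. This is a minimal, hypothetical illustration, not K2view’s or Gartner’s implementation: simple keyword overlap stands in for the semantic or vector search a production retriever would typically use, an in-memory SQLite table stands in for an enterprise system, and the knowledge-base text, table schema, and function names (`search_documents`, `query_customer`, `build_prompt`) are all assumptions.

```python
import re
import sqlite3

# Hypothetical unstructured knowledge base (e.g., policy documents).
KNOWLEDGE_BASE = [
    "Frequent flyers can upgrade to business class using miles.",
    "Checked baggage allowance is two bags on international flights.",
    "Seat selection opens 48 hours before departure.",
]


def tokenize(text: str) -> set[str]:
    """Lowercase word tokens; a crude stand-in for embedding-based search."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def search_documents(query: str, docs: list[str], top_k: int = 1) -> list[str]:
    """Unstructured retrieval: rank documents by word overlap with the query."""
    q = tokenize(query)
    ranked = sorted(docs, key=lambda d: len(q & tokenize(d)), reverse=True)
    # Drop documents that share no words with the query.
    return [d for d in ranked[:top_k] if q & tokenize(d)]


def query_customer(conn: sqlite3.Connection, customer_id: int) -> dict:
    """Structured retrieval: look up the user's record in an enterprise table."""
    row = conn.execute(
        "SELECT name, miles FROM customers WHERE id = ?", (customer_id,)
    ).fetchone()
    return {"name": row[0], "miles": row[1]} if row else {}


def build_prompt(question: str, docs: list[str], record: dict) -> str:
    """Merge retrieved documents, structured facts, and the user's question."""
    context = "\n".join(f"- {d}" for d in docs)
    facts = ", ".join(f"{k}={v}" for k, v in record.items())
    return (
        "Answer using only the context below.\n"
        f"Documents:\n{context}\n"
        f"Customer record: {facts}\n"
        f"Question: {question}"
    )


# Usage: an in-memory SQLite table stands in for a CRM or loyalty system.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, miles INTEGER)")
conn.execute("INSERT INTO customers VALUES (1, 'Marcel', 1100000)")

question = "Can I upgrade to business class with my miles?"
prompt = build_prompt(
    question,
    search_documents(question, KNOWLEDGE_BASE),
    query_customer(conn, 1),
)
print(prompt)  # this enriched prompt is what would be sent to the LLM
```

Instructing the model to answer only from the supplied context is one common way to reduce hallucinations, since the facts it needs arrive with the question rather than from its training data.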


The Benefits of RAG for GenAI

The RAG-GenAI connection lets organizations jumpstart their GenAI apps with:

- Swift implementation

Training an LLM is a time-consuming and expensive undertaking. RAG is a faster and more cost-effective way to inject fresh, trusted data into an LLM, making GenAI easier for businesses to onboard.

- Personalized information

RAG combines an individual customer’s 360-degree data with the extensive general knowledge of the LLM to personalize chatbot interactions and the cross-/up-sell recommendations that customer service agents make.

- Enhanced user trust

RAG-powered GenAI responds reliably because its answers combine data accuracy, freshness, and relevance, personalized for a specific user. Greater user trust, in turn, strengthens your brand’s reputation.

RAG-GenAI Chatbot Use Case

When you need a quick answer to a simple question, a chatbot can be useful. But conventional GenAI bots can’t access user data, often confounding consumers and suppliers alike.

For example, when a frequent flyer asks about upgrading to business class in exchange for miles, an airline’s chatbot that simply answers, “Please contact frequent flyer support,” isn’t much use.

But if RAG enriches the airline’s LLM with that user’s data, the chatbot can generate a far more personalized response, such as: “Hi Marcel, your current balance is 1,100,000 miles. For flight GH12 from Boston to Chicago, departing at 2 pm on August 7, 2024, you could upgrade to business class for 40,000 miles, or to first class for 100,000 miles. What would you like to do?”
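Behind a reply like this, the retrieval step has to supply the facts the LLM will phrase. The sketch below is hypothetical: the `Flight` record, the per-cabin upgrade prices, and the `grounding_facts` helper are illustrative assumptions, not any airline’s or vendor’s data model. It turns retrieved customer and flight data into plain-text facts for the prompt, and it checks affordability in code rather than leaving the arithmetic to the model, on the assumption that a deterministic check is more reliable than an LLM’s arithmetic.

```python
from dataclasses import dataclass


@dataclass
class Flight:
    """Hypothetical flight record retrieved from a booking system."""
    number: str
    origin: str
    destination: str
    departure: str


# Hypothetical upgrade prices, in miles, for this flight.
UPGRADE_COSTS = {"business class": 40_000, "first class": 100_000}


def grounding_facts(name: str, balance: int, flight: Flight,
                    costs: dict[str, int]) -> list[str]:
    """Turn retrieved customer and flight data into facts for the prompt."""
    facts = [
        f"Customer name: {name}",
        f"Miles balance: {balance:,}",
        f"Flight: {flight.number}, {flight.origin} to {flight.destination}, "
        f"departing {flight.departure}",
    ]
    # Check affordability in code so the LLM does not have to do arithmetic.
    for cabin, cost in costs.items():
        status = "affordable" if cost <= balance else "not affordable"
        facts.append(f"Upgrade to {cabin}: {cost:,} miles ({status})")
    return facts


flight = Flight("GH12", "Boston", "Chicago", "2 pm on August 7, 2024")
for fact in grounding_facts("Marcel", 1_100_000, flight, UPGRADE_COSTS):
    print(fact)
```

These facts would be injected into the prompt alongside the user’s question, leaving the LLM to phrase the conversational reply.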

By addressing the limitations of an LLM’s training data, a RAG chatbot can provide more nuanced, reliable, and meaningful responses. By improving the overall accuracy and relevance of chatbot interactions, RAG dramatically enhances user trust in both the bots themselves and in the organizations that operate them.

And when GenAI Data Fusion is applied, a human in the loop becomes unnecessary for requests like this one.

Entity-Based Data Products Spark RAG-GenAI Apps

Entity-based data products are fueling the RAG-GenAI revolution. These reusable data assets combine data with everything needed to make them accessible to RAG, so that fresh, trusted data can be infused into LLMs in real time.

By taking a data-as-a-product approach to RAG-GenAI, you can deliver the entity data you need from any underlying data source, and then transform the data, together with context, into relevant prompts. These prompts are automatically fed into the LLM along with the user query, allowing the LLM to generate a more accurate and personalized response.
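A minimal sketch of the entity-based pattern, assuming three hypothetical source systems (a CRM, a loyalty program, and a bookings system) that share a `customer_id` key: rows are assembled into one entity per customer, and the entity is then serialized as prompt context. The data, field names, and helpers (`build_entities`, `entity_to_context`) are illustrative only and do not describe how any particular data product platform stores or exposes entities.

```python
from collections import defaultdict

# Hypothetical source systems; each row carries the shared customer_id key.
CRM = [{"customer_id": 7, "name": "Marcel", "tier": "Gold"}]
LOYALTY = [{"customer_id": 7, "miles": 1_100_000}]
BOOKINGS = [
    {"customer_id": 7, "flight": "GH12", "route": "Boston-Chicago"},
    {"customer_id": 8, "flight": "GH40", "route": "New York-Denver"},
]


def build_entities(crm: list[dict], loyalty: list[dict],
                   bookings: list[dict]) -> dict[int, dict]:
    """Assemble one entity per customer from several source systems."""
    entities = defaultdict(lambda: {"bookings": []})
    for row in crm + loyalty:
        # Copy scalar attributes (everything except the key) onto the entity.
        entities[row["customer_id"]].update(
            {k: v for k, v in row.items() if k != "customer_id"}
        )
    for row in bookings:
        entities[row["customer_id"]]["bookings"].append(
            {"flight": row["flight"], "route": row["route"]}
        )
    return dict(entities)


def entity_to_context(entity: dict) -> str:
    """Serialize an entity as plain-text context lines for a prompt."""
    lines = [f"{k}: {v}" for k, v in entity.items() if k != "bookings"]
    lines += [f"booking: {b['flight']} ({b['route']})" for b in entity["bookings"]]
    return "\n".join(lines)


entities = build_entities(CRM, LOYALTY, BOOKINGS)
prompt = (
    f"Customer context:\n{entity_to_context(entities[7])}\n\n"
    "Question: Can I upgrade my next flight?"
)
print(prompt)
```

Keying everything on one business entity means a single lookup returns all the context relevant to that customer, rather than the retriever having to query each source system separately at question time.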

A data product platform can be accessed via API, change data capture (CDC), messaging, or streaming, in any combination, which lets it unify data from multiple source systems. It supports multiple RAG use cases that draw insights from your own internal information and data, to:

- Accelerate issue resolution
- Create hyper-personalized marketing campaigns
- Generate personalized cross-/up-sell recommendations for call center agents
- Detect fraud by identifying suspicious activity in a user account
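Of the access methods a data product platform may use, change data capture (CDC) is the one that keeps an entity current between queries: each insert, update, or delete in a source system is emitted as an event and applied to the entity store. The sketch below assumes a simplified, hypothetical event shape (`op`, `customer_id`, `data`) and a hypothetical `apply_change` helper; real CDC pipelines typically emit richer events (for example, before and after row images, timestamps, and source metadata).

```python
from typing import Any

# Hypothetical, simplified CDC events: operation, entity key, changed columns.
EVENTS = [
    {"op": "insert", "customer_id": 7, "data": {"name": "Marcel", "miles": 1_060_000}},
    {"op": "update", "customer_id": 7, "data": {"miles": 1_100_000}},
    {"op": "insert", "customer_id": 9, "data": {"name": "Ana", "miles": 5_000}},
    {"op": "delete", "customer_id": 9, "data": {}},
]


def apply_change(store: dict[int, dict[str, Any]], event: dict[str, Any]) -> None:
    """Apply one change event so the entity store mirrors the source system."""
    key = event["customer_id"]
    if event["op"] == "delete":
        store.pop(key, None)
    elif event["op"] in ("insert", "update"):
        # Merge only the changed columns into the current entity state.
        store.setdefault(key, {}).update(event["data"])
    else:
        raise ValueError(f"unknown operation: {event['op']}")


store: dict[int, dict[str, Any]] = {}
for event in EVENTS:
    apply_change(store, event)
print(store)  # {7: {'name': 'Marcel', 'miles': 1100000}}
```

Because each event carries only the changed columns, the store stays fresh without re-reading whole source tables, which is what lets a prompt built from it reflect the latest balance.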

Discover K2view GenAI Data Fusion, the RAG tool for both structured and unstructured data.