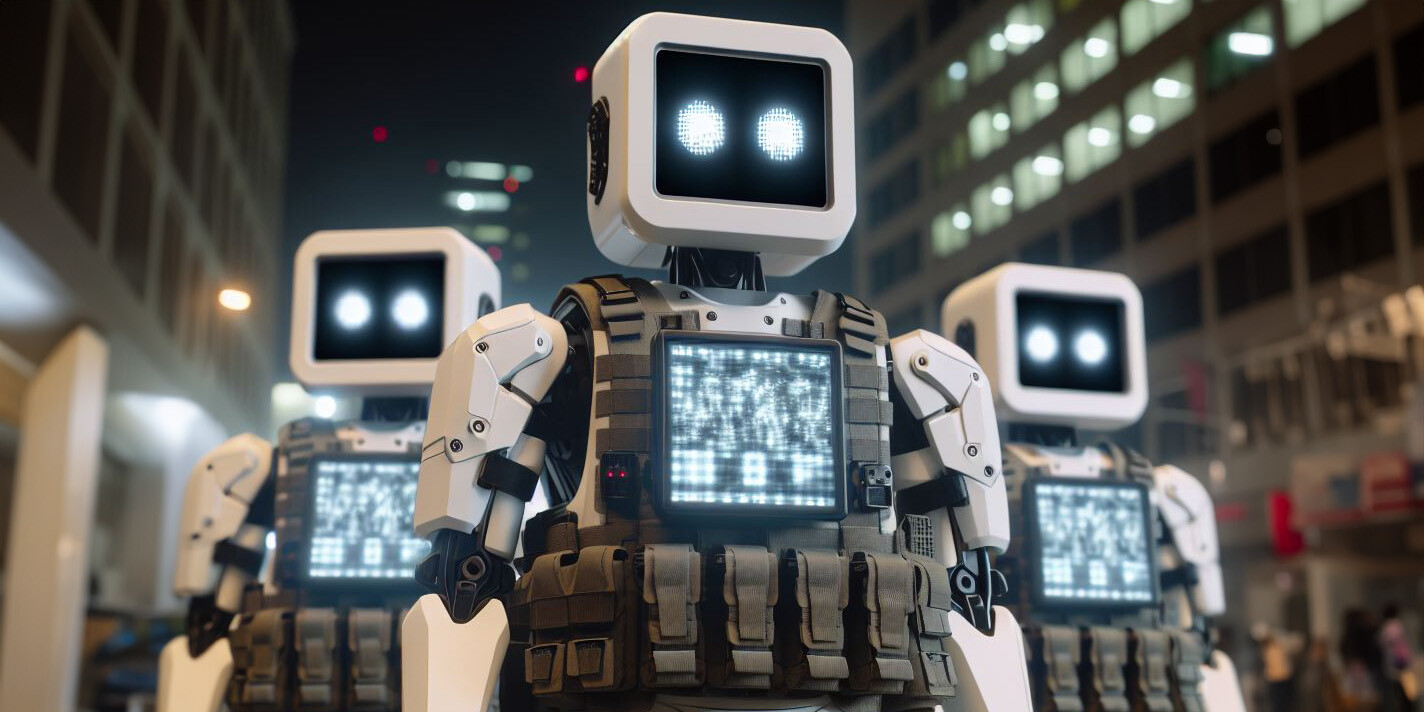

A multi-agent LLM system is made up of multiple intelligent agents, each powered by a large language model, that work together to accomplish complex tasks.

What is a multi-agent LLM system?

A multi-agent LLM (Large Language Model) system is like a generative AI (GenAI) dream team, in which individual LLM agents, each with its own area of expertise, come together to execute tasks. Each agent handles a different part of the job and communicates with the others so the group can reach a collective decision.

In a diverse LLM agent architecture, one agent might specialize in data analysis, another in generating creative text, and a third in filtering outputs for relevance or accuracy. By sharing information and coordinating actions, these agents combine their strengths to solve tasks faster and more efficiently.

The multi-agent LLM approach is particularly valuable for highly complex tasks, from customer support to real-time data processing. It’s a good fit for multi-step, chain-of-thought reasoning processes that require different types of knowledge and skills at the same time.

Multi-agent LLMs also make it easier for organizations to manage several generative AI use cases at once, customize responses for specific needs, and deliver more dynamic, human-like interactions. It’s a powerful example of GenAI teamwork that harnesses diverse abilities to enhance both productivity and precision.

Single-agent LLM vs multi-agent LLM

Single-agent and multi-agent LLMs differ significantly in how, not to mention how well, they handle complex jobs.

True to its name, a single-agent LLM operates independently. It processes tasks one at a time, within a limited frame of reference. This approach works well for simple tasks, but not for complex jobs where accuracy and context are critical. For instance, a single-agent LLM can produce AI hallucinations (false outputs that seem true), and with no other agent reviewing its work, those errors can go uncaught. This is especially problematic in fields that require high levels of precision, like finance, medicine, or law.

Multi-agent LLMs, on the other hand, work collaboratively, with each agent focusing on a different aspect of the task at hand. This teamwork allows for greater accuracy, since agents can check each other’s work. It also helps with complex tasks, since long texts or conversations can be split among agents while continuity and understanding are maintained over time.
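The idea of agents checking each other’s work can be sketched as a simple draft-and-review loop. This is a minimal Python illustration, not a production design: `drafter` and `reviewer` are hypothetical stand-ins for LLM-backed agents, and the reviewer’s rule (reject any draft that cites no source) is an assumption chosen to keep the example deterministic.

```python
from dataclasses import dataclass


@dataclass
class Review:
    approved: bool
    feedback: str


def drafter(question: str, feedback: str = "") -> str:
    """Hypothetical drafting agent; a real one would prompt an LLM."""
    draft = f"Draft answer to: {question}"
    if feedback:
        # Revise in response to the reviewer (here: simply add a citation).
        draft += " [source: knowledge base]"
    return draft


def reviewer(draft: str) -> Review:
    """Hypothetical verifying agent: rejects drafts that cite no source."""
    if "[source:" in draft:
        return Review(approved=True, feedback="")
    return Review(approved=False, feedback="Cite a source for this claim.")


def answer_with_review(question: str, max_rounds: int = 3) -> str:
    feedback = ""
    for _ in range(max_rounds):
        draft = drafter(question, feedback)
        review = reviewer(draft)
        if review.approved:
            return draft
        feedback = review.feedback  # send the critique back to the drafter
    raise RuntimeError("no approved draft within the round limit")


print(answer_with_review("When does the venue open?"))
```

Capping the number of rounds keeps two disagreeing agents from looping forever. In a real system the reviewer would typically be a separate LLM prompt or a rule-based guardrail.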

Multi-agent LLM systems can also be more efficient, because their agents can work in parallel. Instead of processing one subtask at a time, they divide and conquer, which can shorten response times and produce more nuanced and reliable outputs.
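The divide-and-conquer idea can be sketched with Python’s `asyncio`, assuming the subtasks are independent of one another. In this minimal example, `run_agent` is a hypothetical agent and `asyncio.sleep` stands in for a network-bound LLM or tool call; `asyncio.gather` runs the three calls concurrently, so total wall-clock time should be close to the slowest call rather than the sum of all three.

```python
import asyncio
import time


async def run_agent(name: str, subtask: str, seconds: float) -> str:
    """Hypothetical agent; asyncio.sleep stands in for an LLM or tool call."""
    await asyncio.sleep(seconds)
    return f"{name}: finished {subtask}"


async def run_team() -> list[str]:
    subtasks = [
        ("research_agent", "gather sources", 0.3),
        ("analysis_agent", "summarize figures", 0.3),
        ("style_agent", "draft tone guidelines", 0.3),
    ]
    # gather() runs the agents concurrently and returns results in input order.
    return await asyncio.gather(*(run_agent(n, t, s) for n, t, s in subtasks))


start = time.perf_counter()
results = asyncio.run(run_team())
elapsed = time.perf_counter() - start
print(results)
print(f"elapsed: {elapsed:.2f}s")  # expected near 0.3s, versus about 0.9s one by one
```

Subtasks that depend on each other’s output still have to wait, so the speedup applies only to work that can genuinely run side by side.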

| Aspect | Single-agent LLM | Multi-agent LLM |
|---|---|---|
| Complexity | Works alone; handles simple tasks but is unsuitable for complex ones. | Operates as a multitasking team, better suited to handling complex tasks. |
| Accuracy | Prone to hallucinations, a problem in fields requiring high accuracy. | Reduces errors through collaborative verification, for improved accuracy. |
| Context | Loses context, due to difficulty with long texts or conversations. | Maintains context by dividing long texts or conversations among agents. |
| Efficiency | Processes subtasks sequentially, increasing response times. | Operates in parallel, with different agents handling different tasks. |
| Decision-making | Lacks collective wisdom; limited to a single perspective or approach. | Combines the strengths and expertise of different agents to arrive at better decisions. |

How do multi-agent LLM systems work?

LLM agents and LLM function calling are building blocks of GenAI frameworks like Retrieval-Augmented Generation (RAG), enabling LLM text-to-SQL functionality, chain-of-thought prompting, and adherence to LLM guardrails.
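Function calling generally works by having the model emit a structured request (a tool name plus JSON arguments) that the application then executes on the model’s behalf. Here is a minimal Python sketch of that dispatch step. The `get_order_status` tool and the exact shape of `model_output` are assumptions for illustration, since each LLM provider defines its own tool-call format.

```python
import json


def get_order_status(order_id: str) -> dict:
    """Hypothetical tool; a real one would query an order system."""
    return {"order_id": order_id, "status": "shipped"}


# Allowlist of tools the model is permitted to call.
TOOLS = {"get_order_status": get_order_status}


def dispatch(tool_call_json: str) -> str:
    """Execute a model-emitted tool call and return the result as JSON text."""
    call = json.loads(tool_call_json)
    func = TOOLS.get(call["name"])
    if func is None:
        return json.dumps({"error": f"unknown tool {call['name']}"})
    return json.dumps(func(**call["arguments"]))


# A tool call shaped like those many LLM APIs emit (format assumed for illustration).
model_output = '{"name": "get_order_status", "arguments": {"order_id": "A-1001"}}'
print(dispatch(model_output))
```

Keeping tools in an explicit allowlist such as `TOOLS` also acts as a simple guardrail: the model can only trigger functions the application has chosen to expose.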

In a multi-agent LLM system, tasks are completed through collaboration between specialized agents, each with its own skills and focus. Here’s a step-by-step look at how they work:

-

User query

It all starts with a user’s input: a high-level task or a complex query.

-

Task breakdown

The system divides the main task into smaller, manageable subtasks. Each subtask is designed to leverage a specific LLM agent’s unique strength.

-

Agent assignment

The subtasks are then assigned to different agents based on their roles and capabilities.

-

Execution

Each agent deals with its assigned subtask. The agents use their LLMs to reason, devise plans, and complete their tasks by accessing tools and stored information.

When agents have real-time retrieval capabilities, Agentic RAG lets them autonomously seek out and apply relevant knowledge, improving precision and adaptability across the entire system.

-

Communication

Agents communicate with each other throughout the process. If a subtask depends on the output of another agent, the agents share information.

-

Assembling the output

Finally, the system compiles the outputs from all agents, combining them into a single, cohesive response to the user’s query.
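The six steps above can be sketched as a small orchestrator in Python. Everything here is hypothetical: the `analyst`, `writer`, and `checker` agents return placeholder strings instead of calling an LLM, and `break_down` returns a fixed plan where a real system would typically ask a planner LLM to produce one. What the sketch does show is the control flow: subtasks run once their dependencies are met, each agent receives the outputs it depends on, and the results are assembled into one response.

```python
from dataclasses import dataclass, field
from typing import Callable

# An agent takes a subtask description plus upstream outputs and returns text.
Agent = Callable[[str, dict[str, str]], str]


@dataclass
class Subtask:
    task_id: str
    role: str  # which specialist should handle it
    description: str
    depends_on: list[str] = field(default_factory=list)


# Hypothetical specialist agents; each would wrap an LLM call in practice.
def analyst(description: str, inputs: dict[str, str]) -> str:
    return f"[analysis of {description}]"


def writer(description: str, inputs: dict[str, str]) -> str:
    return f"[report built from {', '.join(inputs.values())}]"


def checker(description: str, inputs: dict[str, str]) -> str:
    return f"[fact-check of {len(inputs)} input(s): ok]"


AGENTS: dict[str, Agent] = {"analyst": analyst, "writer": writer, "checker": checker}


def break_down(query: str) -> list[Subtask]:
    """Task breakdown: a fixed plan stands in for an LLM-generated one."""
    return [
        Subtask("s1", "analyst", f"data for '{query}'"),
        Subtask("s2", "writer", "draft the answer", depends_on=["s1"]),
        Subtask("s3", "checker", "verify the draft", depends_on=["s2"]),
    ]


def run(query: str) -> str:
    outputs: dict[str, str] = {}
    pending = break_down(query)
    while pending:
        # A subtask is ready once every subtask it depends on has an output.
        ready = [t for t in pending if all(d in outputs for d in t.depends_on)]
        if not ready:
            raise ValueError("circular or missing dependency in plan")
        for task in ready:
            agent = AGENTS[task.role]                                # agent assignment
            inputs = {d: outputs[d] for d in task.depends_on}        # communication
            outputs[task.task_id] = agent(task.description, inputs)  # execution
        pending = [t for t in pending if t.task_id not in outputs]
    # Assembling the output: combine every agent's result into one response.
    return "\n".join(f"{tid}: {out}" for tid, out in outputs.items())


print(run("Why did support tickets spike last week?"))
```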

Multi-agent LLM examples

Let’s consider a multi-agent LLM system tasked with managing a corporate event, such as organizing a conference. In this case, there might be a variety of specialized agents, each handling a different aspect of the planning process, including:

-

Venue agent

This agent locates and reserves the venue. It first searches through various event spaces, factoring in crowd capacity, layout options, amenities, and budget. It then checks availability and manages the bookings.

-

Speaker agent

This agent identifies and communicates with potential speakers. It handles everything from finding speakers with relevant expertise to coordinating schedules and managing contracts.

-

Catering agent

Responsible for finding and arranging food services, this agent interacts with caterers to create menus that align with dietary needs, budget, and guest preferences.

-

Logistics agent

This agent oversees transportation and accommodation for attendees and speakers. It books flights, as well as hotels conveniently located near the conference center.

-

Marketing agent

This agent focuses on promoting the event. It manages social media, contacts media outlets, and coordinates with graphic designers and content managers to ensure proper branding.
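The event-planning team can be sketched as agents that read from and write to a shared plan, a pattern often called a blackboard. In this minimal Python example, the venue list, budget, and agent behavior are made-up assumptions, and only three of the five agents are shown; the speaker and catering agents would plug into `PIPELINE` the same way. The key point is that the logistics and marketing agents build on the venue agent’s decision instead of working in isolation.

```python
from typing import Any, Callable

Plan = dict[str, Any]

# Hypothetical sample data; a real venue agent would search live listings.
VENUES = [
    {"name": "Hall A", "city": "Austin", "capacity": 300, "cost": 9_000},
    {"name": "Hall B", "city": "Denver", "capacity": 600, "cost": 14_000},
]


def venue_agent(plan: Plan) -> None:
    """Pick the first venue that fits the head count and budget."""
    fits = [v for v in VENUES
            if v["capacity"] >= plan["attendees"] and v["cost"] <= plan["venue_budget"]]
    if not fits:
        raise ValueError("no venue fits the constraints")
    plan["venue"] = fits[0]


def logistics_agent(plan: Plan) -> None:
    """Use the venue agent's choice to target nearby hotels."""
    venue = plan["venue"]
    plan["hotel_search"] = f"hotels near {venue['name']}, {venue['city']}"


def marketing_agent(plan: Plan) -> None:
    """Draft an announcement that reflects the chosen venue."""
    venue = plan["venue"]
    plan["announcement"] = f"Join us at {venue['name']} in {venue['city']}!"


PIPELINE: list[Callable[[Plan], None]] = [venue_agent, logistics_agent, marketing_agent]


def plan_event(attendees: int, venue_budget: int) -> Plan:
    plan: Plan = {"attendees": attendees, "venue_budget": venue_budget}
    for agent in PIPELINE:
        agent(plan)  # each agent sees everything earlier agents wrote
    return plan


result = plan_event(attendees=500, venue_budget=15_000)
print(result["announcement"])  # Join us at Hall B in Denver!
```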

Coordinating multi-agent LLMs with TAG

Table-Augmented Generation (TAG) can help multi-agent LLM systems collaborate better by giving each agent access to multi-source database tables in real time. With TAG, agents can share up-to-date information pulled from different systems, making it easier for them to coordinate on tasks like troubleshooting, order fulfillment, or customer support. This approach allows each agent to work with the latest and most complete data, reducing confusion and overlaps that often happen when information is siloed. TAG helps multi-agent environments operate more smoothly and reach decisions based on a shared, accurate view of the data landscape.
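TAG is often described as three steps: translate a question into a database query, execute it, and have the LLM generate an answer from the returned rows. The minimal Python sketch below focuses on the part that matters for coordination, two agents working against one shared table. It uses an in-memory `sqlite3` database as a stand-in for tables unified from several source systems; the table, the agent functions, and the fixed SQL (which an LLM would normally synthesize) are all assumptions for illustration.

```python
import sqlite3

# In-memory table standing in for data unified from several source systems.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_id TEXT PRIMARY KEY, customer TEXT, status TEXT)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [("A-1", "acme", "processing"), ("A-2", "acme", "shipped")],
)


def table_context(sql: str, params: tuple = ()) -> str:
    """Execute a query and render the rows as plain text for an LLM prompt."""
    cur = conn.execute(sql, params)
    header = " | ".join(col[0] for col in cur.description)
    rows = [" | ".join(str(v) for v in row) for row in cur.fetchall()]
    return "\n".join([header, *rows])


def fulfillment_agent_ship(order_id: str) -> None:
    """Hypothetical fulfillment agent: records a shipment in the shared table."""
    conn.execute("UPDATE orders SET status = 'shipped' WHERE order_id = ?", (order_id,))


def support_agent_prompt(customer: str) -> str:
    """Hypothetical support agent: grounds its prompt in the current table rows."""
    # In TAG, an LLM would synthesize this SQL from the user's question.
    ctx = table_context(
        "SELECT order_id, status FROM orders WHERE customer = ? ORDER BY order_id",
        (customer,),
    )
    return f"Answer the customer using only this data:\n{ctx}"


fulfillment_agent_ship("A-1")        # one agent updates the shared data...
print(support_agent_prompt("acme"))  # ...and the other sees the change immediately
```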

To ensure each agent operates with relevant context and consistent data, the Model Context Protocol (MCP), an open standard for connecting LLM applications to external data sources and tools, can define how structured and unstructured data is passed to the model. MCP helps multi-agent systems maintain alignment, reduce redundancy, and improve prompt grounding by standardizing how contextual inputs are requested and delivered to each agent before they are injected into its LLM’s prompt window.
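MCP messages are JSON-RPC 2.0 requests and responses; a client asks a server to run a tool with a `tools/call` request and receives the result as a list of content items. The Python sketch below builds such a request, passes it to a toy in-process server, and places the returned text into an agent’s prompt. It deliberately omits the connection handshake, transports, and capability negotiation a real MCP client performs, and the `lookup_customer` tool and its data are hypothetical.

```python
import json
from itertools import count

_request_ids = count(1)


def make_request(method: str, params: dict) -> str:
    """Build a JSON-RPC 2.0 request, the message format MCP uses."""
    message = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method, "params": params}
    return json.dumps(message)


def toy_server(raw: str) -> str:
    """Hypothetical in-process server exposing one tool, for illustration only."""
    req = json.loads(raw)
    reply = {"jsonrpc": "2.0", "id": req["id"]}
    if req["method"] != "tools/call":
        reply["error"] = {"code": -32601, "message": "Method not found"}
    elif req["params"]["name"] != "lookup_customer":
        reply["error"] = {"code": -32602, "message": "Unknown tool"}
    else:
        customer_id = req["params"]["arguments"]["customer_id"]
        text = f"Customer {customer_id}: plan=enterprise, open_tickets=2"
        reply["result"] = {"content": [{"type": "text", "text": text}], "isError": False}
    return json.dumps(reply)


# The agent requests context through the protocol, then injects it into its prompt.
request = make_request("tools/call", {"name": "lookup_customer", "arguments": {"customer_id": "C-42"}})
response = json.loads(toy_server(request))
context_text = response["result"]["content"][0]["text"]
prompt = f"Context:\n{context_text}\n\nTask: draft a reply to this customer."
print(prompt)
```

In practice an MCP SDK would handle this message plumbing. The value of the standard is that every agent requests context the same way, whichever server supplies it.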

Get your multi-agent LLM with GenAI Data Fusion

GenAI Data Fusion, the suite of advanced RAG tools by K2view, optimizes the way multi-agent LLMs work by integrating AI prompt engineering with data retrieval and generation technologies. It’s ideal for multi-agent LLM use cases that require a series of complex interactions.

With GenAI Data Fusion you get:

-

Real-time data access, retrieval, and LLM augmentation.

-

Protection of PII (Personally Identifiable Information) and other sensitive data.

-

Inflight insights, as well as data service access request management.

-

Multi-source data collection from enterprise systems via API, CDC, messaging, or streaming.

GenAI Data Fusion employs LLM agents to optimize the response to any user query.

Discover K2view GenAI Data Fusion, the RAG tools with multi-agent LLM functionality built in.