Why is enterprise data crucial for GenAI apps?

LLMs don’t know your business

LLMs provide generic responses because they don’t know your customers, services, employees, or suppliers.

Most of the answers lie in your app data

The data in your business systems, like CRM, ERP, and Billing, is key to answering enterprise GenAI questions.

LLMs can generate false information

Without access to your business data, LLMs may hallucinate, producing false answers that damage your reputation and customer trust.

Prepare your enterprise data for RAG

Our patented Micro-Database technology is a paradigm shift in readying your data for GenAI. It's a new way of organizing enterprise data for retrieval-augmented generation (RAG), doing for multi-source application data what chunking, embedding, and vector databases do for document-based data.

In customer service use cases, for example, the data for each customer is dynamically organized into its own Micro-Database, which is micro-sized, isolated, and complete with all relevant data about the customer.
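
The Micro-Database implementation itself is proprietary, but the underlying idea of organizing multi-source data by business entity can be sketched in a few lines. The Python below is a minimal sketch, not K2view's method: it assumes two hypothetical source extracts (a CRM contact list and billing invoices), groups their rows by customer ID, and loads each customer's rows into a separate in-memory SQLite database. The table names, sample records, and the `build_micro_databases` helper are all illustrative assumptions.

```python
import sqlite3
from collections import defaultdict

# Hypothetical extracts from two source systems (illustrative data only).
CRM_CONTACTS = [
    {"customer_id": "C1", "name": "Alice", "plan": "Premium"},
    {"customer_id": "C2", "name": "Bob", "plan": "Basic"},
]
BILLING_INVOICES = [
    {"customer_id": "C1", "invoice_id": "INV-10", "amount": 79.90, "status": "paid"},
    {"customer_id": "C1", "invoice_id": "INV-11", "amount": 79.90, "status": "overdue"},
    {"customer_id": "C2", "invoice_id": "INV-12", "amount": 29.90, "status": "paid"},
]


def build_micro_databases(crm_rows, billing_rows):
    """Return {customer_id: sqlite3.Connection}, one isolated in-memory DB per customer."""
    # Group every source row by the customer it belongs to.
    by_customer = defaultdict(lambda: {"crm": [], "billing": []})
    for row in crm_rows:
        by_customer[row["customer_id"]]["crm"].append(row)
    for row in billing_rows:
        by_customer[row["customer_id"]]["billing"].append(row)

    micro_dbs = {}
    for customer_id, sources in by_customer.items():
        # Each ":memory:" connection is a separate, private database.
        conn = sqlite3.connect(":memory:")
        conn.execute("CREATE TABLE contact (name TEXT, plan TEXT)")
        conn.execute("CREATE TABLE invoice (invoice_id TEXT, amount REAL, status TEXT)")
        # Named placeholders read only the keys they need from each dict.
        conn.executemany("INSERT INTO contact VALUES (:name, :plan)", sources["crm"])
        conn.executemany(
            "INSERT INTO invoice VALUES (:invoice_id, :amount, :status)",
            sources["billing"],
        )
        conn.commit()
        micro_dbs[customer_id] = conn
    return micro_dbs


if __name__ == "__main__":
    dbs = build_micro_databases(CRM_CONTACTS, BILLING_INVOICES)
    # A query against C1's database can only see C1's rows.
    overdue = dbs["C1"].execute(
        "SELECT invoice_id FROM invoice WHERE status = 'overdue'"
    ).fetchall()
    print(overdue)  # [('INV-11',)]
```

Because each connection holds a single customer's data, a query issued while serving that customer cannot return another customer's records, which is the isolation property described above.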

Build GenAI agents, grounded by AI-ready data

01 No-code agent builder

Build your AI data agents in minutes using our no-code Studio, which includes built-in testing and debugging tools.

02 Chain-of-thought orchestration

Orchestrate chain-of-thought prompting and LLM reflection to maximize LLM response accuracy.

03 Text-to-SQL automation

Dynamically translate user prompts into SQL queries that retrieve the enterprise data needed for LLM augmentation.

04 Real-time data retrieval

Retrieve fresh and compliant data from the Micro-Database, at conversational latency, and within required guardrails.
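
To illustrate what retrieval "within required guardrails" might look like before data reaches the LLM, here is a minimal Python sketch under stated assumptions: an in-memory SQLite database stands in for one customer's Micro-Database, a hand-written SQL string stands in for the output of a text-to-SQL step, and a hypothetical masking policy (`MASKED_COLUMNS`) hides all but the last four characters of sensitive fields. The masked rows are then injected into the prompt as grounding context. None of these names come from a K2view API.

```python
import sqlite3

# Hypothetical guardrail policy: columns that must not reach the LLM in clear text.
MASKED_COLUMNS = {"card_number", "national_id"}


def mask(value: str) -> str:
    """Keep only the last four characters; fully mask very short values."""
    if len(value) <= 4:
        return "*" * len(value)
    return "*" * (len(value) - 4) + value[-4:]


def retrieve_for_prompt(conn: sqlite3.Connection, sql: str) -> list[dict]:
    """Run a query (e.g. one produced by a text-to-SQL step) and mask sensitive columns."""
    cursor = conn.execute(sql)
    columns = [col[0] for col in cursor.description]
    rows = []
    for values in cursor.fetchall():
        row = dict(zip(columns, values))
        for col in columns:
            if col in MASKED_COLUMNS and row[col] is not None:
                row[col] = mask(str(row[col]))
        rows.append(row)
    return rows


def build_grounded_prompt(question: str, rows: list[dict]) -> str:
    """Inject the retrieved rows into the prompt as grounding context."""
    context = "\n".join(
        ", ".join(f"{key}={value}" for key, value in row.items()) for row in rows
    )
    return (
        "Answer using only the customer data below.\n"
        f"Customer data:\n{context}\n\n"
        f"Question: {question}"
    )


if __name__ == "__main__":
    # Stand-in for a single customer's Micro-Database.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE payment (method TEXT, card_number TEXT, status TEXT)")
    conn.execute(
        "INSERT INTO payment VALUES ('credit card', '4111111111111111', 'failed')"
    )
    rows = retrieve_for_prompt(conn, "SELECT method, card_number, status FROM payment")
    print(build_grounded_prompt("Why was my last payment declined?", rows))
```

Masking happens before the prompt is built, so the full card number never appears in the text sent to the model. This sketch covers masking only; it does not validate the SQL or enforce access control.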

05 100s of prebuilt functions

Pick and configure the LLM functions your data agent will use, from basic API calls and data transformations to sophisticated data parsing and analysis.

Closing the GenAI data gap

The K2view RAG tool injects enterprise data into your LLM for GenAI success.

Data guardrails

Conversational latency

Responds to user prompts in milliseconds to support operational GenAI use cases.

Trusted data

Controlled AI costs

AI scale

Reliable LLM responses

K2view GenAI Data Fusion enables Pelephone to slash customer service costs and elevate the customer experience

Maya Bachar Gilad

Chief Information Officer, Pelephone

Power transformative generative AI use cases with your enterprise data

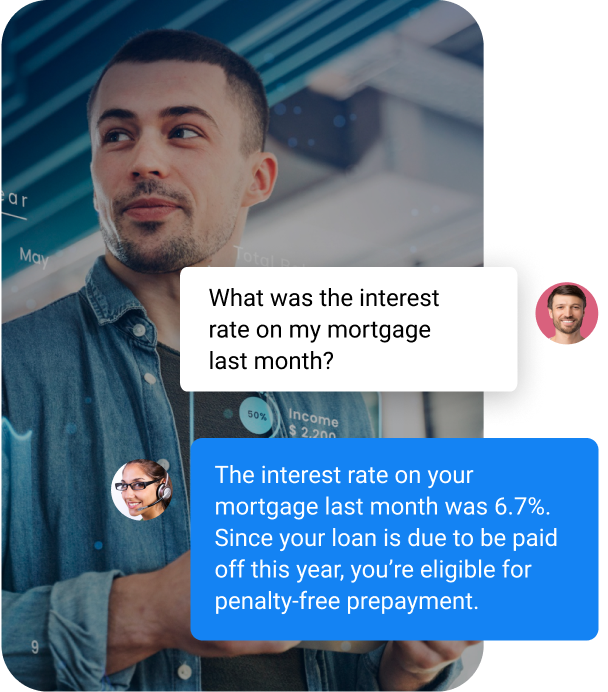

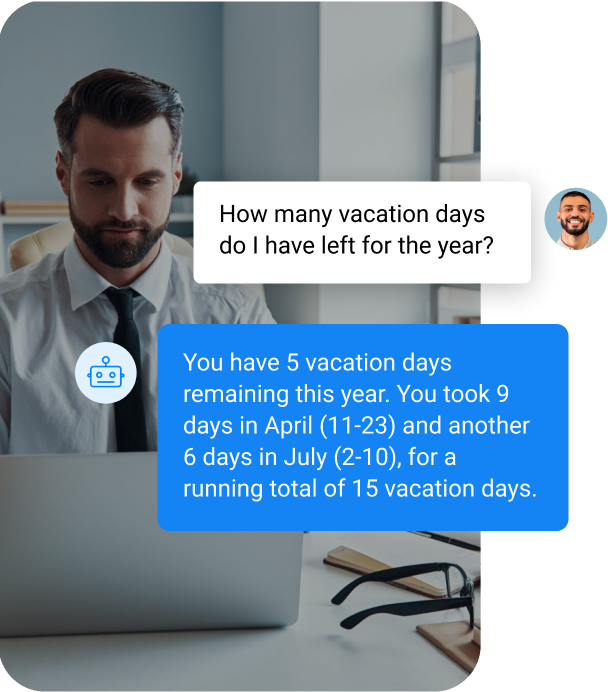

Telco | Customer service chatbot

RAG can elevate your users' chatbot experiences with personalized answers, and reduce your costs with higher first-contact resolution rates.

Banking | Call center assistance

RAG can provide your call center reps with 360° customer views and real-time insights, for greater customer satisfaction and reduced call times.

Enterprise | Internal HR applications

RAG can inject employee data into your HR app to provide users with individually tailored responses about attendance, benefits, and vacation time.

Market survey

Just 2% of US and UK businesses are ready for production GenAI

Our 2024 Enterprise Data Readiness for GenAI survey reveals the top challenges enterprises face as they work toward GenAI deployments.

The most significant GenAI roadblocks lie in the data infrastructure, particularly in the areas of data accessibility and latency, data privacy, and security.
