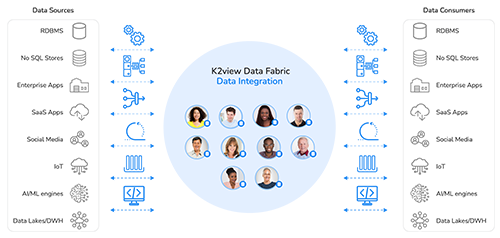

Continuous data integration and delivery

From any source to any target at AI speed and scale

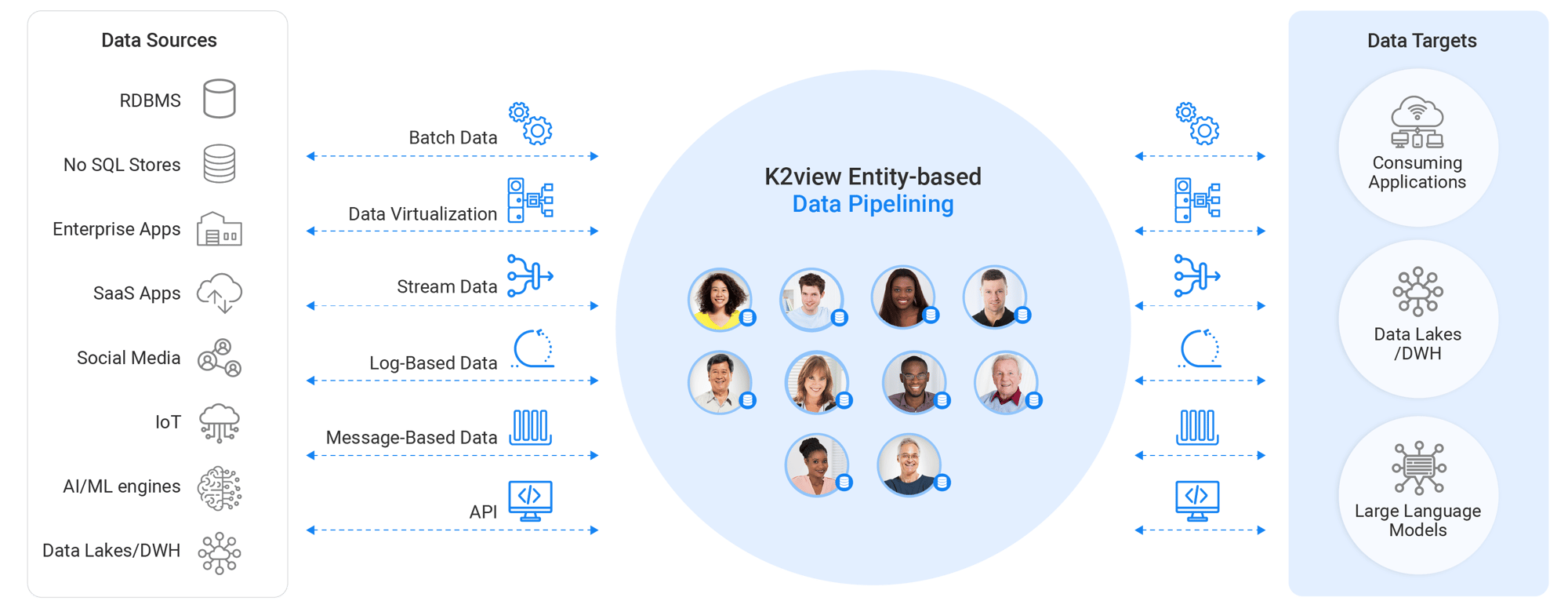

Leverage AI to integrate and deliver data from any data source – on-prem or cloud – to any data consumer, using any data delivery method: bulk ETL or reverse ETL, data streaming, data virtualization, change data capture (CDC), message-based data integration, and APIs.

AI automation + active metadata

- 01 AI automation copilot

- 02 Pipeline auto-recovery

- 03 Schema drift prevention

- 04 Self-optimization

AI automation copilot

Auto-discover and model your data products.

Auto-classify, generate, and document your data pipelines.

Pipeline auto-recovery

Automatically recover your data pipelines after failures without data loss, for seamless data ingestion and integration.
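One common way to recover a pipeline without losing data is to checkpoint the position of the last record that was durably written, and to make writes to the target idempotent so that a replayed record does no harm. The sketch below illustrates that general technique; the `Target`, `Checkpoint`, and `run_pipeline` names are hypothetical and do not represent K2view's recovery mechanism or API.

```python
"""Minimal sketch of checkpoint-based pipeline recovery (hypothetical, not K2view's API)."""
from dataclasses import dataclass, field


@dataclass
class Target:
    """Idempotent sink: rows are upserted by key, so replaying a row is harmless."""
    rows: dict = field(default_factory=dict)

    def upsert(self, key, row):
        self.rows[key] = row


@dataclass
class Checkpoint:
    """Stores the offset of the next record to process."""
    offset: int = 0


def run_pipeline(source, target, checkpoint, fail_at=None):
    """Copy records from `source` to `target`, resuming at `checkpoint.offset`.

    `fail_at` simulates a crash just before the record at that offset is written.
    """
    for offset in range(checkpoint.offset, len(source)):
        if offset == fail_at:
            raise RuntimeError(f"simulated failure at offset {offset}")
        record = source[offset]
        target.upsert(record["id"], record)
        checkpoint.offset = offset + 1  # advance only after a successful write


source = [{"id": i, "value": i * 10} for i in range(5)]
target, checkpoint = Target(), Checkpoint()

try:
    run_pipeline(source, target, checkpoint, fail_at=3)
except RuntimeError:
    pass  # pipeline crashed; records 0-2 are written and checkpointed

run_pipeline(source, target, checkpoint)  # resume from offset 3
print(len(target.rows), checkpoint.offset)  # 5 5
```

In a production system the checkpoint would be persisted, and either the target write and checkpoint update would be committed atomically or the target would be idempotent (as here), so that a crash between the two steps cannot drop or duplicate data.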

Schema drift prevention

Auto-detect schema changes in source systems, analyze their impact on downstream pipelines, and recommend actions that keep data integration running continuously.
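As a rough illustration of drift detection and impact analysis, the sketch below compares two versions of a source schema and flags the pipelines that read a removed or retyped column. The schemas, pipeline names, and the `diff_schema` and `impact` functions are hypothetical examples, not part of any K2view product.

```python
"""Minimal sketch of schema drift detection and impact analysis (hypothetical)."""


def diff_schema(old, new):
    """Compare two {column: type} mappings and classify each change."""
    added = sorted(set(new) - set(old))
    removed = sorted(set(old) - set(new))
    retyped = sorted(c for c in set(old) & set(new) if old[c] != new[c])
    return {"added": added, "removed": removed, "retyped": retyped}


def impact(drift, pipelines):
    """Return pipelines that read a removed or retyped column, with a suggested action."""
    breaking = set(drift["removed"]) | set(drift["retyped"])
    report = {}
    for name, columns in pipelines.items():
        hit = sorted(breaking & set(columns))
        if hit:
            report[name] = f"update mapping for: {', '.join(hit)}"
    return report


old = {"id": "int", "email": "varchar", "phone": "varchar", "balance": "int"}
new = {"id": "int", "email": "varchar", "balance": "decimal", "segment": "varchar"}
pipelines = {
    "crm_to_lake": ["id", "email", "phone"],
    "billing_sync": ["id", "balance"],
    "marketing_feed": ["id", "email"],
}

drift = diff_schema(old, new)
print(drift)
# {'added': ['segment'], 'removed': ['phone'], 'retyped': ['balance']}
print(impact(drift, pipelines))
# {'crm_to_lake': 'update mapping for: phone', 'billing_sync': 'update mapping for: balance'}
```

Added columns are treated as non-breaking here, since existing pipelines do not read them; a real tool might still recommend mapping them.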

Self-optimization

Analyze metadata to continuously improve resilience, optimize data integration performance, and control costs.

Robust, reusable data integration

Entity-based data integration

A data product approach

Ingest and deliver data by business entities

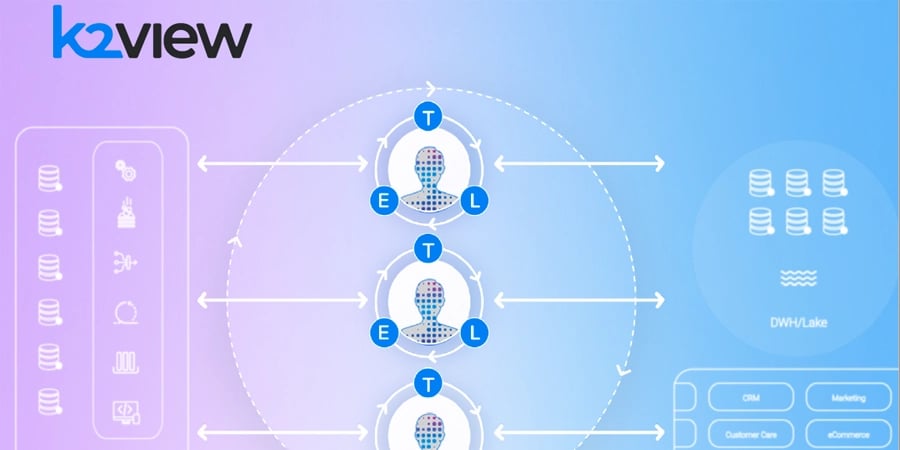

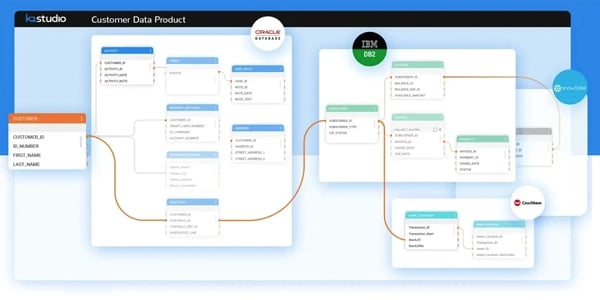

K2view takes a data product approach to data integration.

Data engineers create and manage reusable data pipelines that integrate, process, and deliver data by business entities – customers, employees, orders, loans, etc.

- The data for each business entity is ingested and organized into its own high-performance Micro-Database™.

- The schema for a business entity is auto-discovered from the underlying source systems.

- Data masking, transformation, enrichment, and orchestration are applied – in flight – to make entity data accessible to authorized data consumers, while complying with data privacy and security regulations.
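The entity-based approach can be pictured with a small sketch: rows from several source systems are grouped by entity key, masked in flight, and written to a separate small database per entity. Here an in-memory SQLite database stands in for a per-entity store; the source rows, table layouts, and the `mask_email` and `ingest` functions are hypothetical, and this is not how K2view's Micro-Database is implemented.

```python
"""Minimal sketch: organize source rows by business entity, one small database per entity.

Each in-memory SQLite database stands in for a per-entity store; this illustrates
the idea only and is not K2view's Micro-Database implementation.
"""
import sqlite3

# Rows from two hypothetical source systems, both keyed by customer_id.
crm_rows = [
    (1, "Ada Lovelace", "ada@example.com"),
    (2, "Alan Turing", "alan@example.com"),
]
billing_rows = [
    (1, "INV-100", 250.0),
    (1, "INV-101", 75.5),
    (2, "INV-200", 120.0),
]


def mask_email(email):
    """In-flight masking: keep the domain, hide the local part."""
    _, domain = email.split("@", 1)
    return "***@" + domain


def ingest(crm_rows, billing_rows):
    """Build one database per customer, masking PII before it is stored."""
    entities = {}

    def db_for(customer_id):
        # Create the entity's database and schema on first use.
        if customer_id not in entities:
            db = sqlite3.connect(":memory:")
            db.execute("CREATE TABLE customer (name TEXT, email TEXT)")
            db.execute("CREATE TABLE invoice (invoice_id TEXT, amount REAL)")
            entities[customer_id] = db
        return entities[customer_id]

    for customer_id, name, email in crm_rows:
        db_for(customer_id).execute(
            "INSERT INTO customer VALUES (?, ?)", (name, mask_email(email))
        )
    for customer_id, invoice_id, amount in billing_rows:
        db_for(customer_id).execute(
            "INSERT INTO invoice VALUES (?, ?)", (invoice_id, amount)
        )
    return entities


entities = ingest(crm_rows, billing_rows)
# Queries for one customer touch only that customer's small database.
db = entities[1]
print(db.execute("SELECT email FROM customer").fetchone())  # ('***@example.com',)
print(db.execute("SELECT SUM(amount) FROM invoice").fetchone())  # (325.5,)
```

Because each entity's data sits in its own store, a request for one customer never scans other customers' rows, and the unmasked email never reaches the stored copy.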

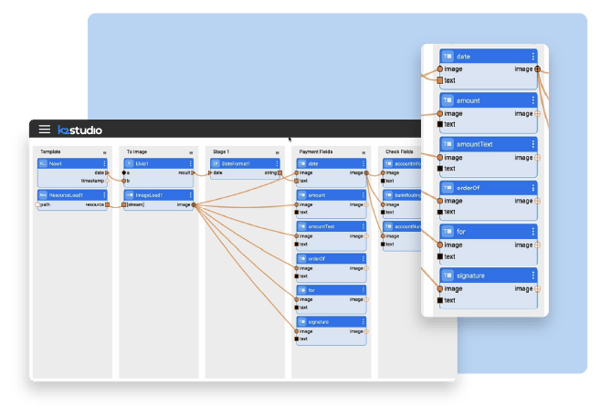

Quick and easy

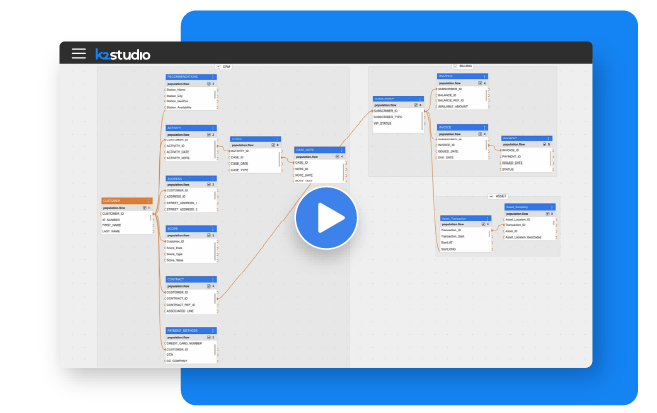

No-code data integration and transformation

K2view data integration includes no-code tooling for defining, testing, and debugging data transformations, from simple to complex. Data transformation is applied in the context of a business entity, so each transformation works on one entity's data at a time, supporting fast, high-scale data preparation and delivery.

- Basic data transformations: data type conversions, string manipulations, and numerical calculations.

- Intermediate data transformations: lookup/replace using reference files, aggregations, summarizations, and matching.

- Advanced data transformations: complex parsing, unstructured data processing, combining data and content sources, text mining, correlations, custom enrichment functions, data validation, and cleansing.
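To make the three levels concrete, the sketch below applies one transformation of each kind to a single customer entity's record. The field names, the `COUNTRY_CODES` reference table, the phone validation rule, and the `transform_customer` function are all hypothetical illustrations, not K2view's no-code tooling.

```python
"""Minimal sketch of basic, intermediate, and advanced transformations on one entity."""
import re

# Hypothetical reference file, loaded as a lookup table.
COUNTRY_CODES = {"US": "United States", "DE": "Germany", "IL": "Israel"}

# Hypothetical validation rule: optional "+", then 7 to 15 digits.
PHONE_RE = re.compile(r"^\+?\d{7,15}$")


def transform_customer(raw):
    """Transform a single customer entity's raw record."""
    out = {}

    # Basic: type conversion, string manipulation, numerical values.
    out["customer_id"] = int(raw["customer_id"])
    out["name"] = raw["name"].strip().title()
    balances = [float(x) for x in raw["balances"]]

    # Intermediate: lookup/replace via reference data, aggregation.
    out["country"] = COUNTRY_CODES.get(raw["country"], "Unknown")
    out["total_balance"] = round(sum(balances), 2)
    out["account_count"] = len(balances)

    # Advanced: cleansing (strip formatting) and validation against a rule.
    phone = re.sub(r"[\s()-]", "", raw["phone"])
    out["phone"] = phone if PHONE_RE.match(phone) else None
    out["valid"] = out["phone"] is not None
    return out


raw = {
    "customer_id": "42",
    "name": "  ada lovelace ",
    "balances": ["100.25", "50.50"],
    "country": "DE",
    "phone": "+49 (30) 123-4567",
}
print(transform_customer(raw))
# {'customer_id': 42, 'name': 'Ada Lovelace', 'country': 'Germany',
#  'total_balance': 150.75, 'account_count': 2, 'phone': '+49301234567', 'valid': True}
```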

Linear scalability on commodity hardware

Real-time performance at AI scale

The K2view Data Product Platform scales linearly to manage hundreds of concurrent pipelines and billions of Micro-Databases, in support of enterprise-scale operational and analytical data integration workloads.

- In-memory computing, patented Micro-Database technology, and a distributed architecture are integrated to deliver unmatched source-to-target data performance.

- K2view can be deployed on-prem or in the cloud, in support of a data mesh architecture, data fabric architecture, or data hub architecture.

- Data pipeline monitoring and control are built in, with observability at the business entity level.

Key features and capabilities

Augmented data integration tools for any task

No-code/low-code tooling

Support for every level of data engineering expertise

Connection to any source

Built-in connectors to hundreds of data sources and applications

Any delivery style

Including CDC, ETL, streaming, and messaging

Data virtualization

Data virtualization support, for an easy-to-access logical abstraction layer

Data transformation

Basic, intermediate, and advanced data transformations

Auto-discovery

Metadata discovery and data classification, for quick implementation

Data quality

In-flight data quality, enforced by customizable business rules

Deploy anywhere

Support for cloud, hybrid, or on-prem data integration
