The growing dependence on big data is often met with statistics showing that many data lake projects fail because they don’t follow data quality management best practices.

Table of Contents

False Assumptions About Data Lakes

The Disconnect Between Data Lakes and Data Quality Management Best Practices

Data Fabric Enables Data Quality Management Best Practices for Your Data Lake

Making Your Data Lake a Success with Data Quality Management Best Practices

False Assumptions About Data Lakes

Gartner’s estimate that 60% of big data projects would fail to meet their goals in 2017 turned out to be too conservative, and Nick Heudecker, a former senior analyst at the company, raised the failure rate to 85% in 2019.

Why does this happen? One reason may be how we perceive and treat data lakes. Enterprises originally embraced data lakes as a self-contained solution that didn’t require data quality best practices. However, the same organizations that wholeheartedly dumped all their raw data into data lakes woke up to find that their data was difficult to understand, cleanse, or use.

A flood of raw data, without context, renders data lakes useless.

The Disconnect Between Data Lakes and Data Quality Management Best Practices

Data lakes gather raw data from multiple sources into one place, without the context and details about its origins that users need. Data quality management best practices are needed to turn this data into actionable insights.

Here are 5 misconceptions that often lead to data lake failures:

- Bringing multiple data sources together without following data quality management best practices does not create a unified area where all data is accessible. Each source requires its own governance standards, and today’s technologies can’t offer that kind of flexibility. The result is slow, inconsistent data processing that achieves the opposite of what we set out to do. The problem is compounded by a high volume of data requests, which can lead to performance issues and the need for additional storage.

- We like to think that every person in an enterprise is data literate and can enjoy the benefits offered by data lakes. In reality, even when IT teams build data lakes to help data consumers, data engineers often speak a language their business counterparts can’t understand. As a result, the data remains inaccessible to most people and is rarely put to operational use.

- A one-size-fits-all approach to data provisioning doesn’t work. Ideally, data quality management best practices are driven by specific business needs. Placing all of the organization’s data in a single place and then expecting everyone to fend for themselves results in poor data quality and turns your data lake into a data swamp.

- If the data isn’t connected to its origins, it has no business context. And the data lake isn’t going to manage itself: data quality and integration levels, for example, must be actively managed, and the original business logic must be understood.

- Time and volume affect a lake’s data quality, making it hard to keep the data clear and fresh. Data that once served the business well may now be outdated and irrelevant. The more data we stockpile without adequately managing it, the greater the risk of contaminating the lake. On top of that, the massive amounts of data stored in the lake increase storage costs without offering any additional value in return.

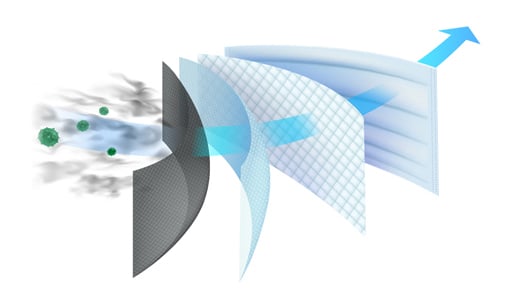

Entity-based data pipelining filters the data entering the lake by cleansing, formatting, enriching, and anonymizing it on the fly.
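The on-the-fly pipelining in the caption can be pictured as a chain of per-record stages that every record passes through before it lands in the lake. This is a minimal Python sketch under stated assumptions, not the platform’s implementation: the stage functions, the field names (`email`, `phone`, `country`), and the `COUNTRY_NAMES` lookup are all hypothetical, and the hash step is pseudonymization rather than full anonymization.

```python
import hashlib
import re
from typing import Callable, Dict, Iterable, Iterator, List

Record = Dict[str, str]
Stage = Callable[[Record], Record]

# Hypothetical reference data used by the enrichment stage.
COUNTRY_NAMES = {"US": "United States", "DE": "Germany", "IL": "Israel"}


def cleanse(record: Record) -> Record:
    """Trim whitespace and drop fields that are empty after trimming."""
    return {k: v.strip() for k, v in record.items() if v and v.strip()}


def format_fields(record: Record) -> Record:
    """Normalize formats: lowercase emails, digits-only phones, uppercase country codes."""
    out = dict(record)
    if "email" in out:
        out["email"] = out["email"].lower()
    if "phone" in out:
        out["phone"] = re.sub(r"\D", "", out["phone"])
    if "country" in out:
        out["country"] = out["country"].upper()
    return out


def enrich(record: Record) -> Record:
    """Add a derived attribute from reference data (country code -> country name)."""
    out = dict(record)
    out["country_name"] = COUNTRY_NAMES.get(out.get("country", ""), "unknown")
    return out


def anonymize(record: Record) -> Record:
    """Pseudonymize the email with a truncated SHA-256 hash; mask all but the last 4 phone digits."""
    out = dict(record)
    if "email" in out:
        out["email"] = hashlib.sha256(out["email"].encode("utf-8")).hexdigest()[:16]
    if "phone" in out:
        digits = out["phone"]
        out["phone"] = "*" * max(len(digits) - 4, 0) + digits[-4:]
    return out


def pipeline(records: Iterable[Record], stages: List[Stage]) -> Iterator[Record]:
    """Lazily pass each incoming record through every stage, in order, before yielding it."""
    for record in records:
        for stage in stages:
            record = stage(record)
        yield record


raw = [{"id": "c1", "email": "  Ana@Example.com ", "phone": "(555) 123-4567",
        "country": "us", "notes": "   "}]
clean = list(pipeline(raw, [cleanse, format_fields, enrich, anonymize]))
print(clean[0])
```

Because `pipeline` is a generator, records are transformed as they stream in rather than being stored raw and cleaned later, which is the point the caption makes about filtering data on its way into the lake.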

Data Fabric Enables Data Quality Management Best Practices for Your Data Lake

The latest data quality management best practices should be followed, specifically those that:

- Don’t consider a data lake an instant solution on its own

- Support an enterprise data pipeline and everything it entails, including data cleansing, formatting, enrichment, and data masking, all performed on the fly

- Call for an automated data catalog that provides data lineage
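A data catalog with lineage records, for every dataset, what it was derived from, so any table in the lake can be traced back to its source systems. The following is a minimal sketch that assumes a hypothetical in-memory `DataCatalog` class and made-up dataset names; real catalogs persist this metadata and capture it automatically from pipeline runs rather than from manual `register` calls.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class CatalogEntry:
    name: str
    owner: str
    upstream: List[str] = field(default_factory=list)  # datasets this one was derived from
    transform: str = ""  # description of the step that produced this dataset


class DataCatalog:
    def __init__(self) -> None:
        self._entries: Dict[str, CatalogEntry] = {}

    def register(self, entry: CatalogEntry) -> None:
        """In an automated setup, each pipeline run would call this for its outputs."""
        self._entries[entry.name] = entry

    def lineage(self, name: str) -> List[str]:
        """Return all upstream datasets of `name`, depth-first, without duplicates."""
        ordered: List[str] = []

        def visit(current: str) -> None:
            entry = self._entries.get(current)
            if entry is None:
                return
            for parent in entry.upstream:
                if parent not in ordered:  # also guards against cycles
                    ordered.append(parent)
                    visit(parent)

        visit(name)
        return ordered

    def origins(self, name: str) -> List[str]:
        """Return the source datasets (those with no upstream) that `name` ultimately comes from."""
        result: List[str] = []
        for ancestor in self.lineage(name):
            entry = self._entries.get(ancestor)
            if entry is None or not entry.upstream:
                result.append(ancestor)
        return result


catalog = DataCatalog()
catalog.register(CatalogEntry("crm.customers", owner="sales-ops"))
catalog.register(CatalogEntry("billing.accounts", owner="finance"))
catalog.register(CatalogEntry("lake.customer_clean", owner="data-eng",
                              upstream=["crm.customers", "billing.accounts"],
                              transform="cleanse, format, mask"))
catalog.register(CatalogEntry("lake.churn_report", owner="analytics",
                              upstream=["lake.customer_clean"],
                              transform="aggregate by month"))
print(catalog.lineage("lake.churn_report"))
print(catalog.origins("lake.churn_report"))
```

Walking the `upstream` links is what keeps lake data connected to its origins: a report can always be traced back to the CRM and billing systems it was built from.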

The enterprise data pipelining approach outlined in these practices is available as part of a Data Product Platform. A data product is a data schema that captures all the attributes of any entity important to the business (like a customer, product, or location) from all source systems. All relevant data for a business entity is collected and moved through a data pipeline to your data lake, ensuring that your data is always complete, consistent, accurately prepared, and ready for analytics and operational workloads.
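The entity-based idea can be illustrated by assembling one record per business entity from several source systems before loading it into the lake. This is a minimal sketch, assuming hypothetical source names (`crm`, `billing`, `support`), a shared `customer_id` key, and a simple precedence rule for resolving conflicting values; it is not the Data Product Platform’s actual schema or API.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

Record = Dict[str, object]
SourceRecord = Tuple[str, Record]  # (source system name, raw record)

# Hypothetical conflict rule: when systems disagree, the earlier system in this list wins.
PRECEDENCE = ["crm", "billing", "support"]
RANK = {system: i for i, system in enumerate(PRECEDENCE)}


def build_entities(rows: Iterable[SourceRecord]) -> Dict[str, Record]:
    """Group source records by customer_id and merge each group into one entity record."""
    grouped: Dict[str, List[SourceRecord]] = defaultdict(list)
    for system, record in rows:
        grouped[str(record["customer_id"])].append((system, record))

    entities: Dict[str, Record] = {}
    for customer_id, records in grouped.items():
        # Apply lowest-precedence systems first so higher-precedence values overwrite them;
        # systems missing from PRECEDENCE rank lowest.
        records.sort(key=lambda item: RANK.get(item[0], len(PRECEDENCE)), reverse=True)
        merged: Record = {"customer_id": customer_id}
        for _, record in records:
            for key, value in record.items():
                if key != "customer_id" and value is not None:
                    merged[key] = value
        merged["sources"] = sorted({system for system, _ in records})
        entities[customer_id] = merged
    return entities


rows = [
    ("billing", {"customer_id": 42, "email": "old@example.com", "balance": 120.5}),
    ("crm", {"customer_id": 42, "name": "Ana Silva", "email": "ana@example.com"}),
    ("support", {"customer_id": 42, "open_tickets": 2, "name": None}),
]
customers = build_entities(rows)
print(customers["42"])
```

Each customer ends up as a single record that combines attributes from every system, with CRM values taking priority where systems overlap. Real platforms typically make such conflict-resolution rules configurable rather than hard-coding them.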

Making Your Data Lake a Success with Data Quality Management Best Practices

A successful data lake is based on data quality management best practices, enabled by an entity-based data platform. With embedded MDM, data governance, and data integration tools, Data Product Platform provides an integrated, connected data layer that’s always protected, accurate, and in sync. It also comes with the latest entity-based ETL solution (eETL), designed to overcome common data lake challenges and deliver precise insights when they’re needed most.