Active retrieval-augmented generation improves passive RAG by fine-tuning the retriever based on feedback from the generator during multiple interactions.

Passive vs active RAG

Text generation is the process of creating natural language text from inputs like prompts and queries. Text generation is usually based on Large Language Models (LLMs) trained on massive amounts of publicly available information (usually via the Internet). The problem is that LLMs don’t have access to all the relevant information or data needed to generate accurate, personalized responses.

To address this issue, researchers came up with the idea of augmenting LLMs with an enterprise’s own internal knowledge bases and systems – called Retrieval-Augmented Generation (RAG).

Simply put, RAG consists of 2 components: a retriever and a generator. The retriever identifies and chooses the most relevant data from the internal sources based on the prompt or query. The generator integrates the retrieved data into the LLM, which then produces a more informed answer.

Depending on how the retriever and the generator interact with each other, RAG-LLM integration can be passive or active – leading to a passive vs active RAG comparison in the next 2 sections.

Passive retrieval-augmented generation

Passive RAG is characterized by one-way interaction between retriever and generator. Basically, the retriever chooses the most relevant internal data and passes it on to the generator. The generator, in turn, produces the response without any additional help from the retriever.

So, the retriever can’t adjust its selection based on partial text created by the generator, and the generator can’t request additional information from the retriever during the generation process.

Although passive RAG is relatively simple – with one-step retrieval and one-step generation – it has its limitations, such as the:

-

Retriever not being able to choose the most relevant data, because it lacks context in terms of both the question and the questioner

-

Generator not being able to use the retrieved data in the best way, due to incompleteness, irrelevance, or redundancy

-

Framework coming up with contradictory or inconsistent answers, since it can’t verify and/or update the retrieved data

Passive RAG examples:

|

Method |

Retriever basis |

Generator basis |

|

RAG (Lewis…, 2020) |

Bi-encoder |

Pre-trained language model |

|

REALM (Guu…, 2020) |

Masked language modeling, trained with the generator |

|

|

Kaleido-BERT (Zhu…, 2020) |

Graph neural network objective, with a transformer encoder |

Active retrieval-augmented generation

Passive RAG is characterized by two-way interaction between retriever and generator, in which both communicate with each other during the generation process to update the retrieved data and the generated text on the fly.

With active RAG, the retriever can choose different data elements for different generation steps, based on partial text produced by the generator, and the generator can request additional or supplementary data from the retriever during the generation process, based on its uncertainty.

Although active RAG is more complex – because it entails multiple retrieval and generation steps – it’s also more flexible and may address some of the limitations of passive RAG, such as the:

-

Retriever choosing the most relevant data, since it can put the query and the questioner into better context

-

Generator using the retrieved data more effectively, because it can be matched to the specific generation

-

Framework producing more accurate and relevant answers, due to its ability to verify and/or update the retrieved data

Active RAG examples:

|

Method |

Retriever basis |

Generator basis |

|

ReCoSa (Zhang …, 2019) |

Transformer encoder, with a |

Hierarchical recurrent |

|

ARAG (Zhao …, 2020) |

Bi-encoder, with a reinforcement learning algorithm |

Pre-trained language model |

|

FiD (Izacard & Grave, 2020) |

Bi-encoder, with a fusion-in-decoder mechanism |

RAG summary

Retrieval-augmented generation has the potential to address the shortcomings of traditional conversational AI models. By incorporating trusted enterprise data in the content generation phase, RAG is setting a new standard for AI data readiness, accuracy, and relevance.

RAG GenAI frameworks are beneficial because they can:

-

Integrate your organization's structured data (and unstructured data) with the publicly available information your LLM's been trained on.

-

Sync with your all your source systems, ensuring data quality for AI in the sense that the data fed into your LLM is always compliant, complete, and current.

-

Enhance user trust, with AI personalization.

With one-step retrieval and generation, passive RAG is relatively simple but also limited by the quality and relevance of the existing data.

With multiple retrieval and generation steps, active RAG is more complex but also more flexible in addressing the limitations of passive RAG.

In a 2024 report called, “How to Supplement Large Language Models with Internal Data”, Gartner lets enterprises know how they can prepare for RAG AI deployments more effectively.

Get the Garter report on RAG and LLMs FREE of charge.

It's time to take RAG personally

Until recently, RAG of any kind (passive or active) concerned itself with docs retrieved from your company's knowledge bases, meaning whatever general (as opposed to personal) information it could glean from your vector files.

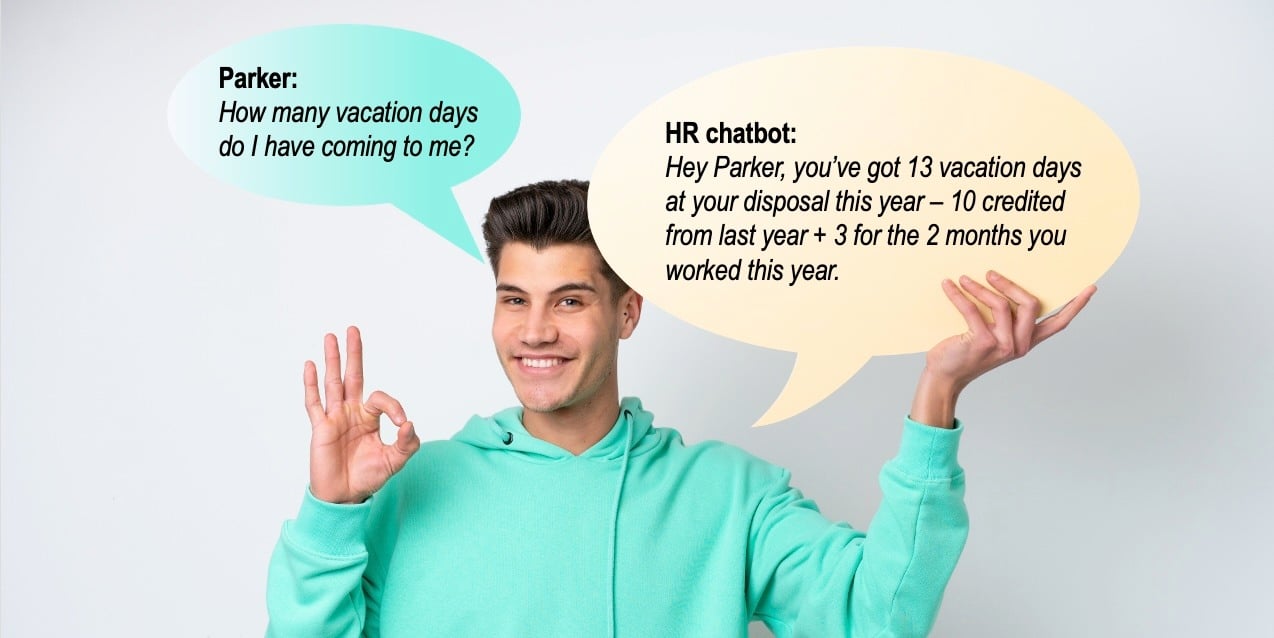

For example, if you wanted to to check your vacation day status in your organization, your RAG chatbot might provide a generalized answer based on a company policy doc retrieved from your knowledge base. Let’s face it, a response like, “Every employee earns 1.5 vacation days for every month worked” isn’t very personal, is it?

Now imagine a framework that could go right to the source, accessing all the data related to you, as an employee, within your organization. No doubt you'd be more satisfied with an answer like, “Hey Parker, you’ve got 13 vacation days at your disposal this year – 10 credited from last year + 3 for the 2 months you worked this year.” That’s where an enterprise RAG comes in.

Enterprise RAG to the rescue

Enterprise RAG accesses customer data from your company's structured data sources for customer-facing use cases, where personalized, accurate responses are required in real time. One of the most popular generative AI use cases, for example, is customer service in which a specially trained chatbot is tasked to reply to any question without a human in the loop.

For this to happen, your enterprise data needs to be organized by data products – according to the business entities relevant for your use case, be they customers, employees, suppliers, products, loans, etc. K2view GenAI Data Fusion stands out among the top AI RAG tools because it manages the data for each business entity in its own, high-performance Micro-Database™ – for real-time data retrieval for operational workloads.

Designed to continually sync with underlying sources, the Micro-Database can be modeled to capture any business entity, and comes with built-in data access controls and dynamic data masking capabilities. And its data is compressed by up to 90%, enabling billions of Micro-Databases to be managed concurrently on commodity hardware.

Discover GenAI Data Fusion, the top-rated RAG tool for 2025.